A long, long time ago, I faced the problem of installing an assembly in the GAC from code, and I even posted the code on my blog:

http://blogs.artinsoft.net/mrojas/archive/2008/04/09/install-assembly-gac-with-c.aspx

Recently, Jose Cornejo wrote to me on my blog because the code was not working, and he showed me the right way to do it. It was sooo easy.

His solution was:

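1. Add a reference to System.EnterpriseServices.dll to your project.

2. Write code like the following:

```csharp
using System.EnterpriseServices.Internal; // Publish lives in System.EnterpriseServices.dll

Publish p = new Publish();
p.GacInstall(@"C:\ClassLibrary1.dll"); // needs admin rights and a strong-named assembly
p.GacRemove(@"C:\ClassLibrary1.dll");  // removes the assembly from the GAC again
```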

Thanks a lot Jose for your help.

These interesting functions have a long history, going back to the BASIC language, QuickBasic and earlier versions of Visual Basic. There isn't much documentation on them, but you can look at very good references such as the one published by Matthew Curland, titled Unofficial Documentation for VarPtr, StrPtr, and ObjPtr.

Well, enough history; now let's get back to the migration part. If you're reading this post, it's probably because you have VarPtr, StrPtr or ObjPtr calls in your code and you want to move those calls to C#.

Well, we have good news and bad news.

The bad news is that the .NET world is a lot different from VB. Remember that your code is running in the managed sandbox, and to get the address of a variable you are probably dealing with unmanaged memory, so some things might not work.

The good news is that I am one of those who believe that nothing is impossible; it's just a matter of the cost of developing the solution :)

Let's look at some alternatives:

VarPtr can be used to get pointers to variables. This can be solved using unsafe code:

VB6

```vb
Dim l As Long
Debug.Print VarPtr(l)
```

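C#

```csharp
using System;
using System.Runtime.InteropServices;

class Program
{
    // Foo is a C (cdecl) function exported from DllTest1.dll
    [DllImport(@"DllTest1.Dll", CallingConvention = CallingConvention.Cdecl)]
    static extern void Foo(IntPtr p);

    static void Main(string[] args)
    {
        int l = 0;

        // Requires compiling with /unsafe ("Allow unsafe code")
        unsafe
        {
            // &l is the C# counterpart of VarPtr(l); l is a local
            // (stack) variable, so the garbage collector will not move it
            int* pointerToL = &l;
            Foo((IntPtr)pointerToL);

            // Print the address stored in pointerToL (as long, so it also fits on 64-bit):
            Console.WriteLine("The address stored in pointerToL: {0}", (long)pointerToL);
        }

        Console.WriteLine("l = {0}", l); // Foo wrote 100 into l
    }
}
```

And the implementation for Foo is a C function like: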

```cpp
// Exported with C linkage, so the name is not mangled and P/Invoke can find it
extern "C"
{
    __declspec(dllexport) void Foo(int* data)
    {
        *data = 100;
    }
}
```

Unsafe code like this will work for VarPtr cases where you have primitive types, like int, short or char variables.
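Before wiring up P/Invoke, it can help to exercise the native side on its own. The following is a minimal native sketch, not part of the original sample: it repeats Foo's body without `__declspec(dllexport)` (so it builds with any compiler), and the helper `CallFooWithAddressOfLocal` is a hypothetical name that mimics what the C# `Main` does by passing the address of a local `int`.

```cpp
#include <cassert>

// Same body as Foo in DllTest1.dll, minus __declspec(dllexport),
// so this sketch compiles without building a DLL.
extern "C" void Foo(int* data)
{
    *data = 100;
}

// Hypothetical helper mirroring the C# Main: take the address of a
// local variable (what VarPtr(l) returned in VB6) and hand it to Foo.
int CallFooWithAddressOfLocal()
{
    int l = 0;
    int* pointerToL = &l;
    Foo(pointerToL);
    return l;  // Foo wrote through the pointer, so l is now 100
}
```

If this returns 100 natively but the C# version does not, the problem is in the interop declaration (entry point, calling convention, parameter type) rather than in Foo.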

Structures are trickier, and you will need to pin the memory so that the garbage collector cannot move the data while native code is using its address. But in general, doing interop with structures is very tricky, and I will publish another post about this.

Unsafe code must be enabled at the assembly level (the /unsafe compiler option, "Allow unsafe code" in the project's Build properties), and the assembly might need to be signed.

StrPtr is very similar to VarPtr, but it is mostly used to provide efficient marshalling to Unicode functions. In most cases, like this one:

```vb
Declare Sub MyUnicodeCall Lib "MyUnicodeDll.Dll" _
    (ByVal pStr As Long)

Sub MakeCall(MyStr As String)
    MyUnicodeCall StrPtr(MyStr)
End Sub
```

The StrPtr call is no longer needed, because the .NET interop mechanism can handle most of this marshalling automatically.
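For reference, what StrPtr handed to MyUnicodeCall is a pointer to a null-terminated UTF-16 buffer, and that is also what the .NET marshaler passes when the parameter is declared as `string` with `CharSet = CharSet.Unicode` in the `DllImport` attribute. The sketch below is a hypothetical native implementation of MyUnicodeCall, because the post only shows its declaration. It uses `char16_t` to stay portable, where a Windows DLL would normally take `const wchar_t*`, and it returns the number of UTF-16 code units (instead of nothing) so the result can be checked.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical native side of MyUnicodeCall from MyUnicodeDll.Dll.
// pStr points to a null-terminated UTF-16 string, the same kind of
// pointer that StrPtr(MyStr) produced in VB6.
std::size_t MyUnicodeCall(const char16_t* pStr)
{
    std::size_t units = 0;
    while (pStr[units] != u'\0')
        ++units;  // counts UTF-16 code units, not user-visible characters
    return units;
}
```

A character outside the Basic Multilingual Plane, such as an emoji, occupies two code units. Keep that in mind when a native function expects a length.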

ObjPtr is the trickiest of all, because it can be used in COM scenarios to get pointers to interfaces implemented by a class.

In .NET, this only applies to classes that are exposed through COM. I have used code like the following for some of those cases:

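```csharp
using System;
using System.Runtime.InteropServices;

object myComObject = null;
//..init code

// Gets the object's IUnknown pointer (this adds a reference)
IntPtr pIUnknown = Marshal.GetIUnknownForObject(myComObject);
IntPtr pIDesiredInterface = IntPtr.Zero;
Guid guidToDesiredInterface = new Guid("XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX"); // placeholder IID
int hr = Marshal.QueryInterface(pIUnknown,
    ref guidToDesiredInterface, out pIDesiredInterface);
// hr == 0 (S_OK) means pIDesiredInterface is valid; release both pointers when done
if (pIDesiredInterface != IntPtr.Zero) Marshal.Release(pIDesiredInterface);
Marshal.Release(pIUnknown);
```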
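To picture what "pointers to interfaces implemented by a class" means, here is a portable C++ imitation of COM's QueryInterface. Everything in it (`InterfaceId`, `IUnknownLike`, `IDrawable`, `IPrintable`, `Widget`) is hypothetical. Real COM uses GUIDs as interface IDs, returns HRESULTs and counts references with AddRef/Release, all of which are omitted here. The sketch shows that one object hands out a different pointer for each interface, which is why the pointer you get from ObjPtr and the one returned by QueryInterface for another interface are generally not the same address.

```cpp
#include <cassert>

// Hypothetical interface IDs, standing in for COM's GUID-based IIDs.
enum class InterfaceId { Unknown, Drawable, Printable };

// Minimal stand-in for IUnknown (no reference counting).
struct IUnknownLike {
    virtual bool QueryInterface(InterfaceId iid, void** out) = 0;
    virtual ~IUnknownLike() = default;
};

struct IDrawable : IUnknownLike { virtual int Draw() = 0; };
struct IPrintable : IUnknownLike { virtual int Print() = 0; };

// One object implementing two interfaces, like a COM class.
class Widget : public IDrawable, public IPrintable {
public:
    bool QueryInterface(InterfaceId iid, void** out) override {
        switch (iid) {
        case InterfaceId::Unknown:    // identity: always the same pointer
        case InterfaceId::Drawable:
            *out = static_cast<IDrawable*>(this);
            return true;
        case InterfaceId::Printable:  // a different sub-object, so a different address
            *out = static_cast<IPrintable*>(this);
            return true;
        }
        *out = nullptr;
        return false;
    }
    int Draw() override { return 1; }
    int Print() override { return 2; }
};
```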

As always, there are exceptions to the rule; these are just some general solutions.

Directly accessing memory is really not desirable in a .NET application, and in most cases you should replace that code with something else. But if you can't, I hope these examples guide you through the process.

Microsoft's Patterns & Practices group released a new version of the Application Architecture Guide last week. This book provides you with best practices for the design of .NET applications. I recommend you read it when planning the evolution of your migrated applications after you reach functional equivalence in the .NET Framework.

Download the Application Architecture Guide, version 2.0

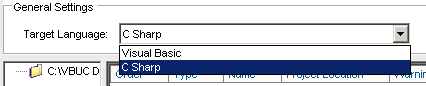

The Visual Basic Upgrade Companion is able to generate both C# and Visual Basic .NET code from the original Visual Basic 6.0 code base. Thus, when doing a migration project with our tools, you can choose either language. This decision is a challenge in itself, especially if you weren't doing any .NET development before the migration. You have to measure the skill set of your staff, and how comfortable they will feel with the transition from VB6 to either language.

Here are some points that are normally thrown around when comparing C# and VB.NET:

- Support: Both languages are well supported by Microsoft, and are first-class citizens on the .NET Framework. Neither one will go the way of the Dodo (or the way of J#, for that matter).

- Adoption: C# seems to have higher adoption than VB.NET. A completely unscientific, and in no way statistically valid, quick search in the books section of Amazon returned 15,429 results for C# and 2,267 results for Visual Basic .NET. Most of our customers migrate to C# instead of VB.NET as well, so there may be some truth in this.

- Perception: C# was developed from the ground up for the .NET Framework. This has affected VB.NET's mind share, as C# is viewed as the new, cool language in town. C# is also seen as an evolution of C and C++, which are considered more powerful languages. And we've all heard at least one Visual Basic 6.0 joke - which means that even though VB.NET is a completely different beast, the "VB" name may work against it.

- Familiarity: Visual Basic .NET's syntax is very similar to VB6's, so it is assumed that VB6 developers will feel right at home. This may or may not be true, since they will need to learn all the differences of the new environment, not only the syntax.

- Cost: It also looks like people with skills in C#, on average, earn more than those with skills in VB.NET. This is also something to keep in mind when deciding which language to choose.

.NET allows you to mix programming languages, even in the same Solution. So you could have some developers work in C# while others work in VB.NET, basically letting them use the language they feel more comfortable with. DON'T. This may become a maintenance nightmare in the future. You should definitely standardize on just one language, either C# or VB.NET, and stick with it. That way you'll save time and resources, and overall have a more flexible team.

Also, keep in mind that the biggest learning curve when coming from the VB6 world won't be the object orientation of VB.NET or the curly bracket syntax of C#. It will be learning the .NET Framework. That is what you should plan training for, not for a particular language. Learning the syntax for a new programming language is pretty trivial compared to the effort required to correctly use all the APIs in .NET.

All in all, you can do anything in either language. I personally like C# better, mainly because I started with C, C++ and Java before moving on to .NET development. Coding in C# is "natural" for me. But you can develop any type of application in C# or VB.NET, as there's no meaningful difference under the covers.

When planning the migration of large applications you may want to use a phased approach. This means that as you migrate the first portions of your application to .NET, you will need to keep the interaction with the Visual Basic 6.0 code. Depending on your application's architecture, you can use one of the following approaches:

- Interop Forms toolkit: Now at version 2.0, it simplifies the process of embedding .NET forms and controls inside VB6 applications. It is recommended for GUI-intensive applications.

- Binary Compatible .NET DLLs: This technique allows you to expose .NET components through COM. It is recommended for multi-tier apps, especially if you want to migrate the back end before you migrate the front end. It allows VB6 and ASP applications to continue using the same components even after they are migrated to the .NET Framework.

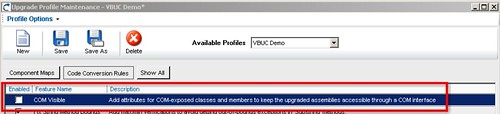

The current version of the Visual Basic Upgrade Companion is able to add binary compatibility information to these components. The VBUC does this automatically to ActiveX DLLs, and it is as easy as activating the "COM Visible" feature in the Upgrade Profile:

Once you have the .NET code, you need to check Register for COM Interop in the Project Properties page in Visual Studio.NET, and then you are all set. Your VB6 and ASP applications will continue working with the newly migrated .NET components, transparently, while they await their turn for a migration.

You can read more about this feature on the COM class exposure page.

COM

The idea is to make a class or several classes available through COM. Then the compiled DLL or the TLB is used to generate an interop assembly and call the desired functions.

With this solution, the current C++ code base can be kept as-is or might require only subtle changes.

Calling a function through COM involves a lot of marshalling and can add an additional layer that is not really needed in the architecture of the solution.

Creating a Managed Wrapper with Managed C++

The idea with this scenario is to provide a class in Managed C++ that will be available in C#. This class is just a thin proxy that redirects calls to the unmanaged (native) object.

Let’s see the following example:

If we have a couple of unmanaged classes like:

```cpp
class Shape {
public:
    Shape() {
        nshapes++;
    }
    virtual ~Shape() {
        nshapes--;
    }
    double x, y;
    void move(double dx, double dy);
    virtual double area(void) = 0;
    virtual double perimeter(void) = 0;
    static int nshapes;
};

class Circle : public Shape {
private:
    double radius;
public:
    Circle(double r) : radius(r) { }
    virtual double area(void);
    virtual double perimeter(void);
};
```
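The post only shows the declarations. To try the native classes on their own before wrapping them, here is a minimal sketch that repeats the declarations (with x and y zero-initialized, an assumption) and supplies the missing definitions. The bodies of move, area and perimeter are assumed to be the usual geometry and are not taken from the original project.

```cpp
#include <cassert>
#include <cmath>

class Shape {
public:
    Shape() : x(0), y(0) { nshapes++; }
    virtual ~Shape() { nshapes--; }
    double x, y;
    void move(double dx, double dy);
    virtual double area(void) = 0;
    virtual double perimeter(void) = 0;
    static int nshapes;
};

class Circle : public Shape {
private:
    double radius;
public:
    Circle(double r) : radius(r) { }
    virtual double area(void);
    virtual double perimeter(void);
};

// Assumed definitions (not shown in the original post).
int Shape::nshapes = 0;  // a static data member needs exactly one definition

void Shape::move(double dx, double dy) {
    x += dx;
    y += dy;
}

double Circle::area(void) {
    const double pi = std::acos(-1.0);
    return pi * radius * radius;
}

double Circle::perimeter(void) {
    const double pi = std::acos(-1.0);
    return 2 * pi * radius;
}
```

A Circle of radius 2 reports an area and a perimeter of 4π, and nshapes drops back to 0 when the object is destroyed.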

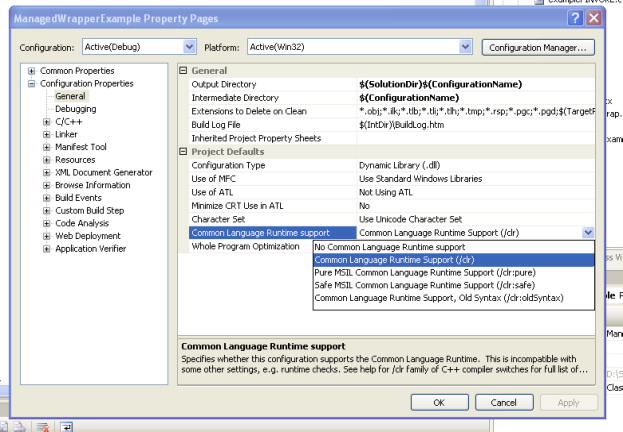

The first thing we can try, to expose our classes to .NET it to set the setting for managed compilation:

If your project compiles, then you are very close. What you need now is to add some managed classes to your C++ project to expose your native classes:

Let’s see the Shape class:

```cpp
// We can use another namespace to avoid name collisions.
// In this way we can replicate the structure of our C++ classes.
namespace exposedToNET
{
    // Shape is an abstract class, so the best thing
    // to do is to generate an interface
    public interface class Shape : System::IDisposable
    {
    public:
        // public variables must be exposed as properties
        property double x
        {
            double get();
            void set(double value);
        }
        property double y
        {
            double get();
            void set(double value);
        }
        // methods do not pose any problems
        void move(double dx, double dy);
        double area();
        double perimeter();
        // public static variables must be exposed as static properties
        static property int nshapes;
    };

    // Static methods or variables of the abstract class are added here
    public ref class Shape_Methods
    {
    // public static variables must be exposed as static properties
    public:
        static property int nshapes
        {
            int get()
            {
                return ::Shape::nshapes;
            }
            void set(int value)
            {
                ::Shape::nshapes = value;
            }
        }
    };
}
```

And for the Circle class we will have something like this:

```cpp
namespace exposedToNET
{
    public ref class Circle : Shape
    {
    private:
        ::Circle* c;
    public:
        Circle(double radius)
        {
            c = new ::Circle(radius);
        }
        // Destructor (Dispose): releases the native object deterministically
        ~Circle()
        {
            this->!Circle();
        }
        // Finalizer: releases the native object if Dispose was never called
        !Circle()
        {
            delete c;
            c = nullptr;
        }
        // public variables must be exposed as properties
        property double x
        {
            virtual double get()
            {
                return c->x;
            }
            virtual void set(double value)
            {
                c->x = value;
            }
        }
        property double y
        {
            virtual double get()
            {
                return c->y;
            }
            virtual void set(double value)
            {
                c->y = value;
            }
        }
        // methods do not pose any problems
        virtual void move(double dx, double dy)
        {
            c->move(dx, dy);
        }
        virtual double area()
        {
            return c->area();
        }
        virtual double perimeter()
        {
            return c->perimeter();
        }
        // public static variables must be exposed as static properties
        static property int nshapes
        {
            int get()
            {
                return ::Shape::nshapes;
            }
            void set(int value)
            {
                ::Shape::nshapes = value;
            }
        }
    };
}
```


SWIG

SWIG is a software development tool that connects programs written in C and C++ with a variety of high-level programming languages.

It is a great tool that supports several languages, such as Python, Perl, Ruby and Scheme, on different platforms.

The exposure mechanism used in this scheme is platform invoke. The issues here are similar to those of COM, because there is some marshalling going on. This scheme might be more efficient than the COM one, but I haven't really tested it to be completely sure that it is better.

I have reviewed the SWIG code, and it might also be possible to modify it to generate wrappers using managed C++, but this is an interesting exercise that I have to leave to my readers. Sorry, I just don't have enough time.

But how is SWIG used?

With SWIG, you add a .i file to your project. This file contains code-generation directives that specify exactly what you want to expose and how.

This can be very helpful if you just want to expose some methods.

If you are lazy like me you can just add something like:

```
/* File : example.i */
%module example

%{
/* Put here the includes with the definitions for your classes */
#include "example.h"
%}

/* Let's just grab the original header file here (add the include here as well) */
%include "example.h"
```

SWIG will then generate a file like example_wrap.cxx that you have to compile with the rest of your C++ code.

It will also generate a set of C# classes that you use in your C# application, so to your program it seems that all the code is just C#.

SWIG is a great tool and has been tested on a lot of platforms.
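To see why a platform invoke wrapper needs an extra C++ layer at all, note that P/Invoke calls exported functions, not C++ classes. A P/Invoke-based wrapper therefore has to flatten each class into free functions that pass the object around as an opaque pointer. The sketch below writes that idea by hand for a simplified Circle. It illustrates the pattern and is not SWIG's actual output: the function names (example_new_Circle and so on) are made up. On Windows, each function would also need `__declspec(dllexport)`, and the C# side would keep the returned pointer in a handle and call these functions through DllImport.

```cpp
#include <cassert>
#include <cmath>

// Simplified native class, standing in for the one declared in example.h.
class Circle {
public:
    explicit Circle(double r) : radius(r) {}
    double area() const { return std::acos(-1.0) * radius * radius; }
private:
    double radius;
};

// Hypothetical flat C entry points of the kind a P/Invoke wrapper needs:
// C linkage (no name mangling) and the object passed as an opaque handle.
extern "C" {

void* example_new_Circle(double r)
{
    return new Circle(r);
}

double example_Circle_area(void* self)
{
    return static_cast<Circle*>(self)->area();
}

void example_delete_Circle(void* self)
{
    delete static_cast<Circle*>(self);
}

}  // extern "C"
```

Every call crosses the boundary with plain values and pointers, which is where the marshalling cost mentioned above comes from.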

We recently did some quick tests on the results of some projects that we just finished migrating to compare the binary size and memory footprint of the resulting migrated .NET application and the original Visual Basic 6.0 application. Here is a brief summary of the results.

Binary Size

We have seen that binary sizes remain very similar or decrease by a small margin when compared to the original Visual Basic 6.0 binaries. There is a small amount of application re-factoring that contributes to this reduction, though, such as consolidating all shared files to a common library (instead of including the same files in several VB6 projects, which increases the code base and binary size).

You do have to take into account the space required by the .NET Framework itself, which varies from 280MB to 610MB.

Memory Footprint

Our observations on the memory footprint of migrated .NET applications, when compared to the original VB6 applications, are consistent with what we've seen with the binaries' size. .NET applications have higher initial memory consumption, since the .NET Framework sets up the stack and heap space at startup, and you have to add the memory required by the JIT compiler. Even with these constraints, a quick review of applications that we've migrated for customers shows that memory consumption is, on average, around 10% lower than in VB6 (these are not scientific measurements, just observations from monitoring memory consumption during the execution of test cases).

We have also seen that .NET normally maintains a more consistent memory usage pattern, while VB6 applications have more peaks where the memory consumption goes up, then back down. This is caused by the .NET Framework holding on to resources until the Garbage Collector runs, and by the overall improvements in memory management included in the .NET Framework.

.NET applications have a memory overhead associated with the .NET Framework itself. This is more noticeable in small applications, but overall, it is a tradeoff required for running in a managed environment. According to the .NET System Requirements, the Framework requires at least 96MB (256MB recommended) of RAM to run. In our experience, however, you should have at least 512MB (1GB recommended) of RAM to run migrated applications comfortably (on Windows XP).

The performance of a migrated .NET application, when compared to the original VB6 application, is normally very similar or better. The only instances when we have seen a performance decrease is when doing an important re-architecture or when the database engine is changed (from Access to Oracle, for example). Every once in a while we also run into issues with the Garbage Collector, but fortunately they are not that common and are easy to detect.

One thing to keep in mind is the way .NET loads assemblies, and how they are executed. .NET assemblies are compiled to an intermediate language, called CIL (Common Intermediate Language, formerly MSIL). When these assemblies are executed, the default behavior for the .NET Framework is to use a Just-In-Time compiler, which compiles the CIL code for a method to native code "on the fly" the first time the method is called. This implies an overhead on this first call, which suffers from a (normally acceptable) performance impact while the JIT compiler runs. Once a method is compiled, though, it is kept in memory, so the performance of subsequent calls is not affected. Once the code is in memory the performance of the .NET application is normally better than the performance of the VB6 application. You can find more information on JIT here.
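The compile-on-first-call behavior can be pictured with a small analogy. The sketch below is not how the CLR is implemented; `LazyMethodTable` and the "compiler" callback are hypothetical stand-ins. Each method is compiled the first time it is called, and later calls go straight to the cached result, which is why only the first call pays the price.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Hypothetical analogy for JIT compilation: a "method" is turned into callable
// code the first time it is invoked, and that code is cached for later calls.
class LazyMethodTable {
public:
    using NativeCode = std::function<int(int)>;
    using Compiler = std::function<NativeCode(const std::string&)>;

    explicit LazyMethodTable(Compiler compile) : compile_(std::move(compile)) {}

    int call(const std::string& method, int arg)
    {
        auto it = cache_.find(method);
        if (it == cache_.end()) {
            // First call: pay the one-time compilation cost.
            ++compilations_;
            it = cache_.emplace(method, compile_(method)).first;
        }
        // Every later call reuses the cached code directly.
        return it->second(arg);
    }

    int compilations() const { return compilations_; }

private:
    Compiler compile_;
    std::map<std::string, NativeCode> cache_;
    int compilations_ = 0;
};
```

Calling the same method twice triggers a single compilation. The first call to a different method triggers another one, which mirrors the startup cost that ngen.exe aims to avoid.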

It is worth mentioning that you can run ngen.exe on the application to compile the CIL assemblies to native code. This may improve application startup and first run times. Here's an interesting take on whether to ngen or not to ngen.

Check out this performance optimization book in MSDN, called Improving .NET Application Performance and Scalability. It is slightly outdated, but the concepts still apply and are useful to improve the performance of migrated applications.

Today we published a new White Paper, Planning a Successful VB to .NET Migration: 8 Proven Tips. In it, we share some tips on things that you should be aware of when migrating your applications.

This is the first in a series of White Papers we will be releasing in the next months. The idea is to share the knowledge we have accumulated over the years performing Visual Basic 6.0 migrations to the .NET Framework. We have been involved with Microsoft in this type of engagement since the very beginning of the platform, and faced lots of issues in the process. This has shaped our current methodology, which, even though it is still improving, has proven itself with solid results (as you can read in the recently released case studies). Hopefully you will find them very useful when faced with a migration task at your organization.

If you are familiar with VB to .NET migration projects, you certainly know by now that this is not a trivial task. And with the state of the economy today, saving scarce, valuable resources is a must. That's when an automated software migration solution proves to be the most viable approach, constituting the most cost-effective, non-disruptive method of application renewal.

I recently read an excellent article in ASP.NET PRO magazine, where Alvin Bruney offered some insight on the challenges of migrating VB6 applications and provided some estimates of the overall effort. For starters, he accurately notes that a large part of the cost in these projects is related to the QA process, something we've definitely seen in large, complex enterprise application upgrades, where it usually represents around 50% of the total time.

He then provides some numbers regarding the cost of VB to .NET migration projects: $1/LOC for simple applications, $3-$7/LOC for large enterprise systems, and $10-$15/LOC for the more complex ones. However, this varies a lot from one project to another, depending not only on the complexity of the application and the target requirements, but also on the quality of the tools and the skills available for the migration. For example, thanks to a proven methodology, consultants with broad experience in VB to .NET migration projects and powerful conversion tools, a turn-key solution delivered by ArtinSoft, taking care of the complete migration up to functional equivalence in the target language, generally costs between $1 and $2 per line of source code. This includes the Supplier Testing activities, though not the User Acceptance Testing, where the customer finally certifies functional equivalence through predefined test cases. And of course there are other post-migration costs involved, like those related to the new application's deployment and enhancement, but I think it is safe to say that the cost per line of code for the migration itself, on a turn-key basis, is rarely higher than $3.
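To put these rates in perspective, here is the arithmetic for a hypothetical 100,000 LOC application. The size is borrowed from the customer example below, and the figures are only the quoted per-line rates multiplied out, not an actual quote:

$$
\begin{aligned}
100{,}000 \text{ LOC} \times (\$1 \text{ to } \$2)/\text{LOC} &= \$100{,}000 \text{ to } \$200{,}000 && \text{(turn-key rate above)}\\
100{,}000 \text{ LOC} \times (\$3 \text{ to } \$7)/\text{LOC} &= \$300{,}000 \text{ to } \$700{,}000 && \text{(Bruney's large-enterprise rate)}
\end{aligned}
$$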

Moreover, when time to market is a critical factor, this automated migration solution just can't be beat. For example, a recent customer estimated that rewriting his highly complex, business-critical, 100,000+ LOC VB6 application from scratch would take him up to 2.5 years, while using ArtinSoft's comprehensive solution allowed him to release the C# version in less than 6 months, using only about 1/17th of the resources required for a rewrite. Expect the case study soon, but trust me: we're not talking n00bies here ;-) Another example, which I mentioned in my last post, described how a recent customer cut the project time by 1 year, representing savings of about $160,000.

On the other hand, calculating how much it will cost someone who licenses our Visual Basic Upgrade Companion to perform the migration in-house is more complicated, since it depends greatly on his dexterity. But just to provide another example of how much our solution reduces the effort, another customer with a 550,000 LOC application recently told us he managed to save 14 man-months by using ArtinSoft's tool internally, instead of the Upgrade Wizard that ships with Microsoft's Visual Studio.

In any case, as Bruney wrote in the aforementioned article, "automation is the key to containing cost". But watch out for conversion tools that will only cause you to waste your time and money. Some of our customers tried some of these options in parallel before choosing our tool, while a few were lured instantly by the deceptively low prices. Most of the latter have come to us in the end, frustrated with the poor results.

By the way, the article says that "the migration tool takes you to VB.NET only". I assume the author is talking about the Upgrade Wizard, since even a couple of the tools I referred to above convert to C#, but the Visual Basic Upgrade Companion is the only one that allows migrating effectively to both VB.NET AND C#. Finally, if you have settled on C# as the target language, I should warn you again about the infamous double-jump approach, that is, converting from VB6 to VB.NET and then to C# (the author mentions this option, though he doesn't exactly recommend it). We've seen a couple of customers who tried that and found it really problematic, to say the least. In fact, they finally decided it was a whole lot easier to start all over from VB6 and use our tool to move to C# directly.

As I mentioned in a post last week, we recently released version 2.2 of the Visual Basic Upgrade Companion. The previous version, 2.1, added some new things, but focused mostly on "under the covers" improvements and on fixing several issues reported with version 2.0. However, for this release, we do have several exciting new features that should make migrations from Visual Basic 6.0 go much more smoothly. Among these, we can mention:

Custom Mappings

The Visual Basic Upgrade Companion enables the user to define customized transformations for the upgrade process. This technique allows you to implement coverage for unsupported legacy components and to enhance and fine-tune the existing support. I already covered Custom Maps in this post, and you can read more about this on the Custom Maps page.

Data access - new flavors available

The Visual Basic Upgrade Companion converts the data access model in your VB6 application (ADO, DAO, RDO) to ADO.NET, using either the SqlClient data provider or the classes defined in the System.Data.Common namespace. Using the latter will allow your migrated application to connect with most major .NET database providers. Version 2.2 added support for the automated migration of DAO and RDO to ADO.NET, and greatly improved the migration of ADO to ADO.NET. More information here.

Naming conventions refactoring

This feature lets end users migrate their Visual Basic 6 code to VB.NET or C# following standard naming conventions. It combines common naming conventions for .NET languages with standard coding practices for C# and VB.NET. You can find more information on this and the next feature on this page.

Renaming mechanism

The renaming feature changes the name of an identifier and all of its references in order to avoid conflicts with another name. Some of the conflicts solved by the VBUC are:

- Keywords: The VBUC must rename identifiers that are the same as keywords in Visual Basic .NET and C#. Moreover, the VBUC should take into account the target language (Visual Basic .NET or C#) to recognize the keywords that apply in each case.

- Case sensitive issues (C#): Visual Basic 6 is a case insensitive language, but C# is not. The VBUC must correct the name references used with different cases to the case used in the declaration.

- Scope conflict: This is necessary when an element of a Type declaration has the same name as the Type itself. If this case is detected, the element declaration must be renamed along with the references to this type element.

- Conflicts with .NET classes: This section applies for Forms and UserControls, mainly, because they could declare some member names that are part of the corresponding class in .NET (in this case System.Windows.Forms.Form & System.Windows.Forms.UserControl). These members must be renamed in order to avoid any conflict.
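The first two rules can be sketched in a few lines. This is not the VBUC's implementation. The keyword lists are tiny hypothetical samples, appending an underscore is just one possible renaming strategy (C# also allows verbatim identifiers such as `@lock`), and all the names are made up. The sketch shows why the target language matters: C# keywords are case-sensitive, so `Lock` is a valid C# identifier while `lock` is not, whereas VB.NET matches keywords regardless of case.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <set>
#include <string>
#include <unordered_map>

enum class Target { VBNet, CSharp };

// Tiny, hypothetical samples of each language's reserved words.
// VB.NET keywords are stored in lowercase because VB.NET is case-insensitive.
const std::set<std::string> kVBNetKeywords = {"handles", "inherits", "module", "overrides", "shadows", "shared"};
const std::set<std::string> kCSharpKeywords = {"base", "checked", "event", "lock", "object", "params", "string"};

std::string ToLower(std::string s)
{
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char ch) { return static_cast<char>(std::tolower(ch)); });
    return s;
}

bool IsKeyword(const std::string& id, Target target)
{
    if (target == Target::CSharp)
        return kCSharpKeywords.count(id) > 0;  // C# keywords are case-sensitive
    return kVBNetKeywords.count(ToLower(id)) > 0;
}

// Keyword rule: append '_' until the identifier no longer clashes with a keyword.
std::string RenameIfKeyword(const std::string& id, Target target)
{
    std::string candidate = id;
    while (IsKeyword(candidate, target))
        candidate += '_';
    return candidate;
}

// C# case rule: every reference is rewritten to the spelling of its declaration.
class CaseNormalizer {
public:
    void Declare(const std::string& name) { declared_.emplace(ToLower(name), name); }
    std::string FixReference(const std::string& ref) const
    {
        auto it = declared_.find(ToLower(ref));
        return it == declared_.end() ? ref : it->second;
    }
private:
    std::unordered_map<std::string, std::string> declared_;
};
```

A real tool would also apply each rename to every reference of the identifier, which this sketch leaves out.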

User Controls and Custom Properties

In Visual Basic 6.0, user controls expose their programmer-defined properties in the property list of the designer window. These user properties can be configured to be displayed in a specific category. Based on these settings, the Visual Basic Upgrade Companion can determine the most appropriate type and settings for the resulting properties, to achieve functional equivalence with the original VB6 user property. I plan on elaborating on this feature in a future blog post.

Most people migrating their application want to move ahead and take advantage of new technologies and new operating systems.

So if you have a VB6 application and you migrate it to .NET with us, we will recommend using ADO.NET and automate the process.

Why?

You can still use ODBC, but here are some compelling reasons not to:

* There are very fast ADO.NET providers available. Using ODBC adds interop overhead that can affect performance.

* Some vendors do not support and/or certify the use of their ODBC drivers from .NET. So in those cases, if you use ODBC, you are on your own.

During my consulting experience I have seen several problems with ODBC drivers, ranging from just poor performance to problems with some SQL statements, stored procedure calls, database-specific features, or complete system instability.

* There are also problems running in 64-bit.

This last one is very concerning. If you made all the effort to migrate an application to .NET and then run it on, for example, a 64-bit Windows Server 2003 machine, it won't be able to use your 32-bit ODBC drivers unless you go to the Build tab of the project properties and set Platform Target to "x86".

This is very sad, because your application then cannot take advantage of all the 64-bit resources.

If you are lucky, you might find a 64-bit version of your ODBC driver, but I really recommend going straight to 64-bit and using ADO.NET. And that's exactly what we can help you do, especially with version 2.2 of the VBUC.
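Whether a process can load a given ODBC driver comes down to its bitness: a 64-bit process can only load 64-bit DLLs, and a 32-bit process only 32-bit ones. A .NET application compiled for Any CPU runs as a 64-bit process on 64-bit Windows, which is why setting Platform Target to x86 makes the 32-bit drivers usable again. In native code, the pointer size tells you which kind of process you are in; the function name below is hypothetical.

```cpp
#include <cassert>

// Returns 32 or 64 depending on the architecture this code was compiled for.
// A 64-bit process can only load 64-bit DLLs (ODBC drivers included).
constexpr int ProcessBitness()
{
    return static_cast<int>(sizeof(void*) * 8);
}
```

In a migrated C# application, the equivalent check is `IntPtr.Size == 8`, or `Environment.Is64BitProcess` from .NET 4.0 on.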

After several months of hard work, we are proud to announce the release of version 2.2 of the Visual Basic Upgrade Companion. This version includes significant enhancements to the tool, including:

- Custom Maps: You can now define custom transformations for libraries that have somewhat similar interfaces. This should significantly speed up your migration projects if you are using third party controls that have a native .NET version or if you are already developing in .NET and wish to map methods from your VB6 code to your .NET code.

- Legacy VB6 Data Access Models: For version 2.2, we now support the transformation of ADO, RDO and DAO to ADO.NET. This data access migration is implemented using the classes and interfaces from the System.Data.Common namespace, so you should be able to connect to any database using any ADO.NET data provider.

- Support for additional third party libraries: We have enhanced the support for third party libraries, for which we both extended the coverage of the libraries we already supported and added additional libraries. The complete list can be found here.

- Plus hundreds of bug fixes and code generation improvements based on the feedback from our clients and partners!

You can get more information on the tool on the Visual Basic Upgrade Companion web page. You can also read about our migration services, which have helped many companies to successfully take advantage of their current investments in VB6 by moving their applications to the .NET Framework in record time!

Do you remember this classic bit, a pretty bad joke from the end of the movie "Coming to America":

"A man goes into a restaurant, and he sits down, he's having a bowl of soup and he says to the waiter: "Waiter come taste the soup."

Waiter says: Is something wrong with the soup?

He says: Taste the soup.

Waiter says: Is there something wrong with the soup? Is the soup too hot?

He says: Will you taste the soup?

Waiter says: What's wrong, is the soup too cold?

He says: Will you just taste the soup?!

Waiter says: Alright, I'll taste the soup - where's the spoon??

Aha. Aha! ..."

Well, the thing is that this week, when I read this column over at Visual Studio Magazine, this line of dialog was the first thing that came to mind. First of all, we have the soup: according to the Support Statement for Visual Basic 6.0 on Windows® Vista™ and Windows® Server 2008™, the Visual Basic 6.0 runtime support files will be supported until at least 2018 (Windows Server 2008 came out on 27 February 2008):

Supported Runtime Files – Shipping in the OS: Key Visual Basic 6.0 runtime files, used in the majority of application scenarios, are shipping in and supported for the lifetime of Windows Vista or Windows Server 2008. This lifetime is five years of mainstream support and five years of extended support from the time that Windows Vista or Windows Server 2008 ships. These files have been tested for compatibility as part of our testing of Visual Basic 6.0 applications running on Windows Vista.

Then, we have the spoon, taken from the same page:

The Visual Basic 6.0 IDE

The Visual Basic 6.0 IDE will be supported on Windows Vista and Windows Server 2008 as part of the Visual Basic 6.0 Extended Support policy until April 8, 2008. Both the Windows and Visual Basic teams tested the Visual Basic 6.0 IDE on Windows Vista and Windows Server 2008. This announcement does not change the support policy for the IDE.

So, even though you will be able to continue using your Visual Basic 6.0 applications, sooner or later you will need to either fix an issue found in one of them, or add new functionality that is required by your business. And when that day comes, you will face the harsh reality that the VB 6.0 IDE is no longer supported. Even worse, you have to jump through hoops in order to get it running. If you add the fact that we are probably going to see Windows 7 ship sooner rather than later, the prospect of being able to run your application but not fix or enhance it on a supported platform becomes a real possibility. So make sure that you plan for a migration to the .NET Framework ahead of time; you don't want to hear anybody telling you "Aha. Aha!" if this were to happen.

I never really expected to witness events like the ones we are seeing nowadays. We are certainly experiencing a huge impact on our conception of society, mainly driven by the adjustment of the economic system; probably an era is ending and a new one is about to be born, though I cannot know that for sure. I just hope for a better one.

Normally, I am a pessimistic character, and more than ever we have reasons for being so. However, in this post I want to be a little less so than usual, though with prudence. I just needed to reorder my thoughts for my mental health: I have been reading so much C++, JavaScript and similar code lately.

I am neither an economist nor a sociologist, nothing even close; just a regular old IT guy, needless to say. I just want to reflect here, quite informally, on the IT model, and more specifically the software model, and its role in our society, in a context like the one we are currently experiencing. What is a "software model", by the way? Here I mean it by overloading the term: in a society we have state, political, economic and legal models, among others. Good or bad, recognized as such or not, I think we have also invented a software model which, to a significant extent, orchestrates the other, historically better-known models; let us call them (more) natural models.

I remember the late 90s, as the new millennium approached and many conjectures were made about the Y2K problem, especially concerning legacy software systems, and its potentially devastating costs and negative effects. For instance, let us take some quotes from here (the related articles are worth following):

"People have been sounding the alarm about the costs of the millennium bug--the software glitch that could paralyze computers come Jan. 1, 2000--for a couple of years. Now, the hard numbers are coming in and, if the pattern holds, they point to an even larger bill than many feared just a few months ago.

[...]

Outspoken Y2K-watcher Edward E. Yardeni, chief economist at Deutsche Bank Securities Inc., says the numbers show that some organizations are ''just starting'' to wake up to Y2K's potential for damage--but he believes the possible impact is enormous. In fact, Yardeni puts the chances of a recession in 2000 or 2001 at 70% because of ''a glitch in the flow of information.''"

Reading that again, the old-fashioned term "software crisis" (attributed to Bauer in 1968 and popularized by Dijkstra's seminal work in the seventies), which we taught in college classrooms, suddenly seemed to make more sense than ever. But romanticism aside, we in IT know that software is still quite problematic, for the same reasons as back then; it is now a matter of size. In Dijkstra's own words, almost forty years ago (The Humble Programmer):

"To put it quite bluntly: as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem, and now we have gigantic computers, programming has become an equally gigantic problem."

But those were surely different times, weren't they? Exaggerated or not (as a source of business or even religious opportunities), Y2K gave us a serious, global warning about the extent to which software had grown into many parts of our normal society model, even at a time when the Web was apparently not yet wired into the global business model the way we have lived it in recent years.

I do not know whether the final Y2K costs were as big as predicted, or even bigger, but I would guess the problem certainly gave a huge impulse to investment in the software sector, though, I am afraid, not always leading to an improvement of the software model and its practices. Those were the golden times, probably ending now, when appearance was frequently valued more than content and Artificial Intelligence became a movie.

What will happen to the software model if the underlying economic model, and with it the other more natural models, is now adjusting so dramatically? Will a shortage of capital for investment and consumption stop the evolution and development of the software model, or change it (again) for the worse?

I do believe our software model remains essentially as bad as it was in the Y2K era. This is a consequence of its own abstract character, living in an increasingly "concrete" business world. I would further guess that it is even worse now, because it has proliferated so widely and become structurally more complicated. Maintenance and formal understanding are now harder because of, among other things, dynamism, lack of standardization, and the tendency to value external functionality more than maintainability and soundness. For exactly that reason, I also believe that (no matter what the new economic model ends up looking like) software will have to become structurally stronger and more reliable: after economic stabilization and restart, whenever that happens, businesses will become stricter and avoid mere appearance in order to be able to generate wealth once again.

As a consequence, I would expect (and wish) that software for effectively manipulating software (legacy and newer, dynamic spaghetti included) will be in greater demand than ever. More effective testing and dynamic maintenance will be required during every adjustment phase of the new economic model. I also see standardization as a natural requirement for, and driver of, higher software quality. Platform independence will be more important than ever, I guess. No matter which agents become the winners during the adjustment and after the economic system recovers, a better software model will be critical for each of them, globally. Software, as an expression of substantial knowledge, will be considered an asset under any circumstances. I think we should be seeing a less speculative and more consistent economic model, where a better software model will be precisely the discriminator for competing with real substance rather than with fancy emptiness. The claim must be demonstrable.

Sometimes things do not turn out as bad as they appeared. Sometimes they turn out even worse, and we absolutely have to be prepared for such a scenario now. But I also want to believe that there can be an opportunity out there. I would like to think that improving the software model will be an essential piece of the economic transition, and that a transition to something better in software will take place in the end. At least, I wish it.

I recommend reading Dijkstra again, especially these days, if only as an interesting historical comparison. Honestly trying not to fool myself, I quote him on his vision of a better software model, as we have called it here:

"There seem to be three major conditions that must be fulfilled. The world at large must recognize the need for the change; secondly the economic need for it must be sufficiently strong; and, thirdly, the change must be technically feasible."

I think we might already have the first two. Concerning the third, and slowly returning to the C++ code I am reading, I just reshape his words: I absolutely fail to see how I could keep programs firmly within my intellectual grip when the programming language itself escapes my intellectual control. But that is exactly what we have to do.

So you now have a license for the Visual Basic Upgrade Companion, you open it up, and you don't know what to do next. Well, here are five simple steps you can follow to get the most out of the migration:

- Read the "Getting Started" Guide: This is of course a no-brainer, but, well, we are all developers, and as such we only read the manual when we reach a dead end ;). The Getting Started guide is installed alongside the Visual Basic Upgrade Companion, and you can launch it from the same program group in the Start menu:

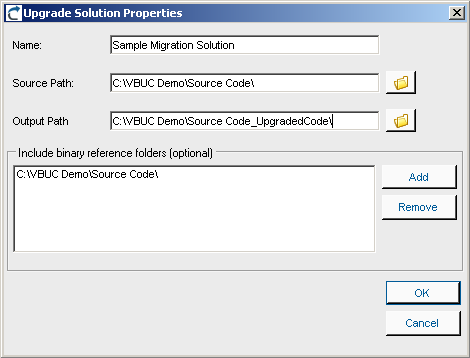

- Create a New Upgrade Solution: the first step for every migration is to create a new VBUC Migration Solution. In it, you need to select the directory that holds your sources, select where you want the VBUC to generate the output .NET code, and specify the location of the binaries generated and used by the application. This last bit of information is very important, since the VBUC extracts information from the binaries in order to resolve the references between projects and create a VS.NET solution complete with references between the projects.

- Select the Target Language: One of the most significant advantages of using the Visual Basic Upgrade Companion is that it allows you to generate Visual Basic .NET or C# code directly. This can be selected using the combo box in the Upgrade Manager's UI:

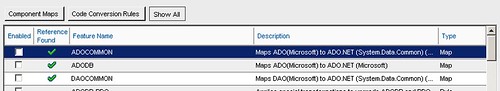

- Create a Migration Profile: The Visual Basic Upgrade Companion gives end users a large degree of control over how the .NET code will be generated. In addition to the option of creating either C# or VB.NET code, there is also a large number of transformations that can be turned on or off using the profile manager. Starting with version 2.2, the Profile Manager recognizes the components used in the application and adds a green checkmark to the features that apply transformations to those components. This simplifies profile creation and improves the quality of the generated code from the start:

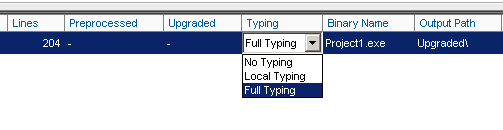

- Select the Code Typing Mechanism: the VBUC is able to determine the data type of variables in the VB6.0 code that are declared either without a type or as Variant. For each project included in the migration solution, you can select one of three typing levels: Full Typing, Local Typing or No Typing. Full Typing is the most accurate mechanism: it infers the type of each variable depending on how it is used throughout the project. This means that it needs to analyze the complete source code in order to make typing decisions, which slows down the migration process and increases memory consumption. Local Typing also infers data types, but only within the current scope of the variable. No Typing leaves the variables with the same data types as in the VB6.0 code.

After going through these five steps, you will have an Upgrade Solution ready to go that will generate close-to-optimal code. The next step is just to hit the "Start" button and wait for the migration to finish.

A few days ago we posted some new case studies to our site. These case studies highlight the positive impression that the capabilities of the Visual Basic Upgrade Companion leave on our customers when we do Visual Basic 6.0 to C# migrations.

The first couple of them deal with a UK company called Vertex. We did two migration projects with them, one for a web-based application and another for a desktop application. Vertex had a very clear idea of what they wanted the migrated code to look like. We added custom rules to the VBUC in order to meet their highly technical requirements, so that the VBUC would do most of the work and speed up the process. Click on the links to read the case studies for their Omiga application or for the Supervisor application.

The other one deals with a Texas-based company called HSI. By working with us, they managed to move all their Visual Basic 6.0 code (including their data access and charting components) to native .NET code. They estimate that by using the Visual Basic Upgrade Companion they saved about a year of development time and a lot of money. You can read the case study here.

As described in a previous post, we are building a tool for reasoning about CSS and related HTML styling tools using a logic framework (logic grammars in a human-readable style are usually highly ambiguous, which makes them an interesting case study). Since we need a textual language, a DSL, for the tool, I was asked to try MGrammar (Mg for short), a DSL generator that is part of the Microsoft Oslo SDK, recently presented at Microsoft PDC.

I was not familiar with the details of the Oslo project, so I was a little skeptical about it, also because it would entail learning yet another language (M). But I fought my preconceptions and decided to just take a look and try to have fun with it. I do not intend to blog about the whole Oslo project here, of course; I just want to tell a simple story about using Mg in its current state, no more.

In general, I very much like the idea that data specification (models), data storage, querying and the like can be separated from procedural languages in a declarative and yet more expressive way; ideally, in my own way of expressing my model (beyond standard diagrams and graphical perspectives). As I understand the source material, which is not extensive yet, Oslo and M address the general vision of model-oriented software development, while Mg addresses the latter point, expressivity.

What I have seen of M so far is indeed very interesting, and I really liked it: it extends LINQ, which I consider a very interesting proposal from Microsoft, and it also reminded me of OCL in some ways. Incidentally, there is an open-source OCL library called Oslo, which has nothing to do with Microsoft; it is just funny to mention.

Mg

I focused on trying out Mg; we do not consider M at all. This is not a tutorial; there is a nice one here, for instance. If you are interested in my example and its sources, please email me and I will send them to you.

The SDK also contains a nice demo of a musical language (the Song grammar) and its player in C#. Moreover, M and Mg themselves are specified in Mg, and the corresponding sources are available in the SDK. Hence, you can learn by reading grammars, which helps, because the documentation is not yet as rich as I would like.

Mg source files resemble, at least in principle, the input of other parser generators (such as ANTLR, Coco, JavaCC, YACC/Lex and relatives). I do not know the details of the parsing technique behind Mg; however, judging from what mg.exe reports, it appears to be LR parsing.

Mg offers really powerful features. For instance, the following are tokenizing rules:

```
token Digit = "0".."9";
token Digits = Digit+;
token Sign = ("+"|"-");
token Number = Sign? Digits ("." Digits)?;
```

Or you can constrain the repetition factor (at least 1, at most 10), as in:

```
token ANotTooLongNumber = Digit#1..10;
```
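For readers more used to regular expressions, the token rules above can be approximated with .NET's `Regex` class. This is only an illustrative translation, assuming whole-string matching is what you want. It is not something the Oslo SDK generates, and the class name `MgTokenSketch` is hypothetical. The sketch uses `[0-9]` rather than `\d` because `\d` in .NET also matches non-ASCII digits, while Mg's `Digit` is the range `"0".."9"`.

```csharp
using System.Text.RegularExpressions;

// Hypothetical regex approximations of the Mg token rules above
// (an illustration only; Mg does not produce these).
public static class MgTokenSketch
{
    // token Number = Sign? Digits ("." Digits)?;
    // Sign is "+" or "-", Digits is one or more characters in "0".."9".
    public static readonly Regex Number = new Regex(@"^[+-]?[0-9]+(\.[0-9]+)?\z");

    // token ANotTooLongNumber = Digit#1..10;  (between 1 and 10 digits)
    public static readonly Regex ANotTooLongNumber = new Regex(@"^[0-9]{1,10}\z");
}
```

So `MgTokenSketch.Number` accepts `-12.5` but rejects `12.`, mirroring the optional `("." Digits)` group.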

You can even have parameters in scanner rules, so you may write (taken from the nice tutorial here):

```
token QuotedText(Q) = Q (^('\n' | '\r') - Q)* Q;
token SingleQuotedText = QuotedText("'");
token DoubleQuotedText = QuotedText('"');
```

This handles different kinds of quotation marks depending on the parameter Q! Notice the subpattern ^('\n' | '\r') - Q: it means any character except newline or carriage return, also excluding the character Q.
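A parameterized token such as `QuotedText(Q)` behaves much like a function that returns a pattern. The sketch below mimics that idea in C# by building a `Regex` for a given quote character. `QuotedTextSketch` and its members are hypothetical names, and this is an analogy rather than how Mg compiles the rule. The quote is written as a `\uXXXX` escape so that it is safe both inside and outside the character class.

```csharp
using System.Text.RegularExpressions;

// Hypothetical C# analogue of the parameterized Mg token
//   token QuotedText(Q) = Q (^('\n' | '\r') - Q)* Q;
public static class QuotedTextSketch
{
    public static Regex QuotedText(char q)
    {
        // \uXXXX escape for q; valid both inside and outside [...]
        string quote = "\\u" + ((int)q).ToString("X4");
        // Q, then any run of characters other than \r, \n and Q, then Q again.
        return new Regex("^" + quote + "[^\\r\\n" + quote + "]*" + quote + "\\z");
    }

    // The two instantiations, one per quote character.
    public static readonly Regex SingleQuotedText = QuotedText('\'');
    public static readonly Regex DoubleQuotedText = QuotedText('"');
}
```

As with the Mg rule, a quote character that matches Q cannot appear inside the text, and neither can a line break.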

The Oslo SDK provides an editor called Intellipad, which can be used for both M and Mg development. You need to experiment a little before getting used to it, but after a short time it turns out to be a very nice tool. A command-line compiler for Mg is also included in the SDK, so you may edit your grammar in any text editor and compile it from a shell. But Intellipad is quite powerful: it lets you edit and debug your grammar simultaneously and quickly. Intellipad shows you several panes to work with: in one you write your grammar, and in another you enter input for your grammar, which is immediately checked against your rules. A third (tree view) pane shows you the AST that your grammar projects from the input you are providing.

Finally, a fourth pane shows you the error messages, including any parsing ambiguities for the given input and grammar. Interestingly, only then do you notice a potential conflict; I did not see any form of static analysis in the editor that warns about such cases. Is there one?

Apart from that, I actually enjoyed using it. I am not sure I appreciate all of its functionality yet, but it was quite easy to create my grammar. Mg is modular, as M is, so you can organize and reuse parts of your grammar. That is very nice.

To start defining a language, you write something like:

```
language LogicFormulae {
    // rules go here
}
```

To define the language you mainly use "token" and "syntax" statements, among others. Here, the name "LogicFormulae" will be used later on, when we access the corresponding parser programmatically.

Case Study

Back to our case study: our simple language contains logic formulae such as "p&(p->q) <-> p&q" (called well-formed formulae, or wffs), where, as usual, a lot of ambiguity (conflicts) may occur. Frequently, the result is that you have to rewrite your grammar into a usually uglier one in order to recover determinism, and the fun is over. What I liked about Mg is its very readable style for handling such cases. For instance, I have a rule like:

```
syntax ComposedWff =
      precedence 1: ParenthesisWff
    | precedence 1: PossibilityWff
    | precedence 1: NecessityWff
    | precedence 1: QuantifiedWff
    | precedence 2: NotWff
    | precedence 3: AndWff
    | precedence 4: ImpliesWff
    | precedence 4: EquivWff
    | precedence 5: OrWff
    ;
```

Using the precedence keyword, I can "reorder" the rule to avoid ambiguity. Thus, "all x.p & q" parses as "(all x.p) & q" under this rule because of the precedence I chose.

The next example shows how to deal with associativity and operator precedence:

```
syntax AndWff = Wff left(4) "&" Wff;
syntax ImpliesWff = Wff right(3) "->" Wff;
```
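The precedence and associativity annotations above play the role of the precedence table in a hand-written parser. The following sketch illustrates what they buy you. It does not show how Mg implements them (mg.exe appears to be LR-based). It is a small precedence-climbing parser in C# for a subset of the wff syntax: atoms, negation, conjunction, disjunction, implication and equivalence. Several details are assumptions:

- the spellings `~` and `|` for negation and disjunction;
- the binding strengths, which follow the usual logic convention (`~` tightest, then `&`, `|`, `->`, `<->`) and do not reproduce the exact numbers in `ComposedWff`;
- the names `WffParser` and `Parse`.

As in the `ImpliesWff` rule, `->` is right-associative.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical precedence-climbing parser for a subset of the wff syntax.
// Binding strengths follow the usual logic convention (an assumption).
public sealed class WffParser
{
    // Higher number = binds tighter.
    static readonly Dictionary<string, int> Prec = new Dictionary<string, int>
    {
        { "<->", 1 }, { "->", 2 }, { "|", 3 }, { "&", 4 }
    };
    static readonly HashSet<string> RightAssoc = new HashSet<string> { "->" };

    readonly List<string> tokens;
    int pos;

    WffParser(List<string> tokens) { this.tokens = tokens; }

    // Parses text and returns a fully parenthesized form that shows the grouping.
    public static string Parse(string text)
    {
        var parser = new WffParser(Tokenize(text));
        string result = parser.ParseBinary(1);
        if (parser.pos != parser.tokens.Count)
            throw new FormatException("Unexpected token: " + parser.tokens[parser.pos]);
        return result;
    }

    static bool At(string s, int i, string op) =>
        i + op.Length <= s.Length && string.CompareOrdinal(s, i, op, 0, op.Length) == 0;

    // Splits input into atoms, operators and parentheses; whitespace is skipped.
    static List<string> Tokenize(string s)
    {
        var result = new List<string>();
        int i = 0;
        while (i < s.Length)
        {
            if (char.IsWhiteSpace(s[i])) i++;
            else if (At(s, i, "<->")) { result.Add("<->"); i += 3; }
            else if (At(s, i, "->")) { result.Add("->"); i += 2; }
            else if ("&|~()".IndexOf(s[i]) >= 0) { result.Add(s[i].ToString()); i++; }
            else if (char.IsLetter(s[i]))
            {
                int start = i;
                while (i < s.Length && char.IsLetterOrDigit(s[i])) i++;
                result.Add(s.Substring(start, i - start));
            }
            else throw new FormatException("Unexpected character: " + s[i]);
        }
        return result;
    }

    string Peek() => pos < tokens.Count ? tokens[pos] : null;

    // Consumes a chain of binary operators whose strength is at least minPrec.
    string ParseBinary(int minPrec)
    {
        string left = ParseUnary();
        while (Peek() is string op && Prec.TryGetValue(op, out int prec) && prec >= minPrec)
        {
            pos++;
            // Right-associative: an equal-strength operator may follow on the right.
            // Left-associative: the right operand must bind strictly tighter.
            string right = ParseBinary(RightAssoc.Contains(op) ? prec : prec + 1);
            left = "(" + left + " " + op + " " + right + ")";
        }
        return left;
    }

    // Atoms, negation and parenthesized sub-formulae.
    string ParseUnary()
    {
        string t = Peek() ?? throw new FormatException("Unexpected end of input");
        pos++;
        if (t == "~") return "~" + ParseUnary();
        if (t == "(")
        {
            string inner = ParseBinary(1);
            if (Peek() != ")") throw new FormatException("Missing ')'");
            pos++;
            return inner;
        }
        if (char.IsLetter(t[0])) return t;
        throw new FormatException("Unexpected token: " + t);
    }
}
```

Under these assumptions, `WffParser.Parse("p&(p->q) <-> p&q")` returns `((p & (p -> q)) <-> (p & q))`. `Parse("p->q->r")` returns `(p -> (q -> r))`, which shows the right associativity of `->`.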

Mg automatically projects syntax into its D-Graphs using the rule names and token images, so it always generates an AST without you having to specify one, which is very useful. For example, the "AndWff" rule produces a node labeled "AndWff" with three children (including the token "&"). You can specify your own constructors to be produced instead:

```
syntax AndWff = x:Wff left(4) "&" y:Wff => And[x, y];
```

Constructors such as "And" need not be classes; they simply become node labels.

Conflicts

The conflicts were apparently solved this way, because the engine behind Intellipad no longer complained about my test cases. I do not know whether there is a way to verify the grammar in Intellipad, so I just assumed it was conflict-free. However, when I compiled it with mg.exe (using the -reportConflicts option), I got a list of warnings, as with any LR parser generator. That was disappointing. Are we seeing different parsing engines?

Using C#

The generated parser and the graphs it produces can be accessed programmatically, which was of special interest in my case, beyond working with Intellipad. Because I was not able to create a parser image directly from Intellipad, I did the following: I compiled my grammar with the mg.exe command, producing a so-called image, a binary file with the "mgx" extension (other targets are possible, I think). This mgx image can be loaded into a C# project using some of the SDK libraries (it can also be executed using mgx.exe). You load the image like this:

```csharp
parser = MGrammarCompiler.LoadParserFromMgx(stream, languageName);
```

where "stream" is a stream over the mgx file produced by the mg compiler, and "languageName" is a string naming the language to use for parsing ("LogicFormulae" in my case). I then parse the input given by the string variable "input" as follows:

```csharp
rootNode = parser.ParseObject(input, ErrorReporter.Standard);
```

The variable rootNode (of type object, by the way!) refers to the root of the parse graph. An object of the GraphBuilder class gives you access to the nodes, their labels and their children, if any. Using such an object, you visit the graph as usual, for instance with a pattern like this:

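```csharp
// Depth-first walk over the parse graph returned by ParseObject.
// VisitValue is your own handler for leaf values (token text).
void VisitAst(GraphBuilder builder, object node)
{
    foreach (object childNode in builder.GetSequenceElements(node))
    {
        if (childNode is string)
            VisitValue((string)childNode);   // leaf: a token value
        else
            VisitAst(builder, childNode);    // inner node: recurse
    }
}
```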

For some reason, as you have probably noticed, the library uses object as the node type.
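VisitAst needs the Oslo SDK assemblies to run as written. The following self-contained stand-in shows the same traversal shape over a hypothetical `LabeledNode` type. Each node has a label (a rule name such as "AndWff", or a custom constructor such as "And") and ordered children, and leaves are plain strings. `LabeledNode`, `AstWalker` and their members are illustrative assumptions, not SDK types.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for a labeled graph node: a label plus ordered
// children, where children are either nested nodes or string leaves.
public sealed class LabeledNode
{
    public string Label { get; }
    public IReadOnlyList<object> Children { get; }

    public LabeledNode(string label, params object[] children)
    {
        Label = label;
        Children = children;
    }
}

public static class AstWalker
{
    // Same depth-first shape as VisitAst: strings are leaves, nodes recurse.
    // Here the leaf handler simply collects the values in order.
    public static void CollectLeaves(object node, List<string> leaves)
    {
        if (node is string value)
        {
            leaves.Add(value);
            return;
        }
        foreach (object child in ((LabeledNode)node).Children)
            CollectLeaves(child, leaves);
    }

    // Renders a node as Label[child, child, ...], close in spirit to the
    // And[x, y] constructor notation used in the grammar.
    public static string ToText(object node)
    {
        if (node is string value) return value;
        var n = (LabeledNode)node;
        var parts = new List<string>();
        foreach (object child in n.Children) parts.Add(ToText(child));
        return n.Label + "[" + string.Join(", ", parts) + "]";
    }
}
```

For instance, collecting the leaves of `new LabeledNode("AndWff", "p", "&", "q")` yields p, &, q, with the "&" token included, as in the default projection. `AstWalker.ToText(new LabeledNode("And", "p", new LabeledNode("Not", "q")))` yields `And[p, Not[q]]`.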

Conclusions

I found Mg very powerful, and prototyping with Intellipad was actually fun; well, almost always. I only have some doubts concerning usage and performance. For instance, with Intellipad you can develop and test very fast, but there seems to be no static analysis tool inside Intellipad that warns about potential conflicts in the grammar.

The other unclear point is the loading time of the engine in C#: it takes far too long to get the parser object from the mgx file. In fact, the mg compiler itself seems too slow, particularly compared with Intellipad. It seems as though mg.exe and Intellipad are not connected as a tool chain, or something like that. But surely it is just me, as this is my first contact with this SDK.

In Visual Basic 6.0 you can specify optional parameters in a function or sub signature. This, however, isn't possible in C# versions prior to 4.0. In order to migrate code that uses these optional parameters to C#, the Visual Basic Upgrade Companion creates overloaded methods covering all the possible combinations present in the method's signature. The following example explains this further.

Take this declaration in VB6:

```vb
Public Function OptionSub(Optional ByVal param1 As String, Optional ByVal param2 As Integer, Optional ByVal param3 As String) As String
    OptionSub = param1 + param2 + param3
End Function
```

From the declaration, you can see that the function can be called with 0, 1, 2 or 3 arguments. When you run the code through the VBUC, you get four different methods, one for each overload combination, as shown below:

```csharp
static public string OptionSub( string param1, int param2, string param3)
{
    return (Double.Parse(param1) + param2 + Double.Parse(param3)).ToString();
}

static public string OptionSub( string param1, int param2)
{
    return OptionSub(param1, param2, "");
}

static public string OptionSub( string param1)
{
    return OptionSub(param1, 0, "");
}

static public string OptionSub()
{
    return OptionSub("", 0, "");
}
```

The C# code above is exactly as it comes out of the VBUC, with no changes applied. There may be other ways around this problem, such as using parameter arrays (params) in C#, but that would be more complex in scenarios such as mixing different data types (as seen above).
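For comparison, a C# 4.0 or later compiler (which postdates this migration scenario) accepts default parameter values, so a single method can express the same four call shapes. This is a sketch under that assumption. `OptionalParamsSketch` is a hypothetical name, and the method body is copied from the VBUC output.

```csharp
// Sketch assuming a C# 4.0+ compiler: default values stand in for the four
// generated overloads. The body mirrors the VBUC output (Double.Parse on the
// string arguments), so behavior matches the overloads for the same inputs.
public static class OptionalParamsSketch
{
    public static string OptionSub(string param1 = "", int param2 = 0, string param3 = "")
    {
        return (double.Parse(param1) + param2 + double.Parse(param3)).ToString();
    }
}
```

One trade-off to keep in mind: C# bakes default values into each call site at compile time, so changing a default later requires recompiling the callers. Separate overloads, as generated by the VBUC, avoid that. Note also that, just like the generated `OptionSub()` overload, omitting a string argument here ends up calling `Double.Parse("")`, which throws a `FormatException`.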

Right now we are entering the final stages of testing for the release of the next version of the Visual Basic Upgrade Companion (VBUC). This release is scheduled for sometime within the next month.

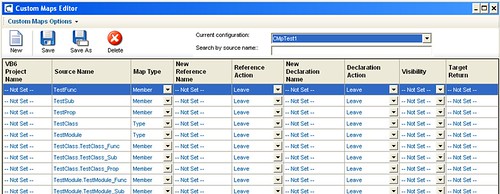

One of the most exciting new features of this release is the addition of Custom Maps. Custom Maps are simple translation rules that can be added to the VBUC so that they are applied during the automated migration. This allows end users to fine-tune specific mappings so that they better suit a particular application. You can also create mappings for third-party COM controls that you use, as long as the APIs of the source and target controls are similar.

The VBUC will include an interface for you to edit these maps:

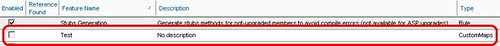

Also, once you have created maps, you can select them using the Profile Editor.

Custom Maps add a great deal of flexibility to the tool. Even though only simple mappings are allowed (simple as in one-to-one mappings; currently you cannot add new code before or after the mapped element), this feature allows end users to edit, modify or delete any of the included transformations, and to add their own. By doing this, you can have the VBUC do more of the work automatically, freeing up developers' time and speeding up the migration process even more.