While working on a migration project recently, we found a very particular behavior of the TabIndex property when migrating from Visual Basic 6.0 to .NET. It is as follows:

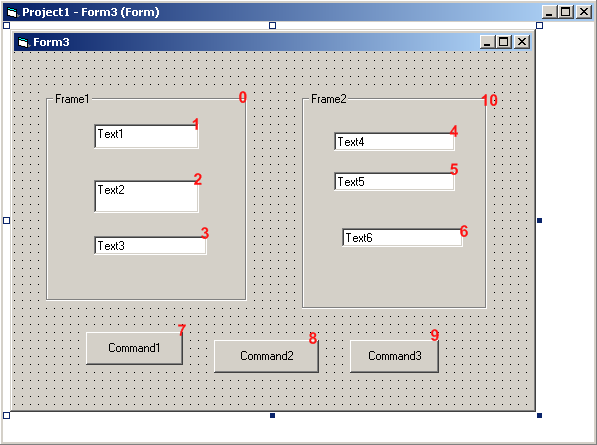

In VB6, we have the following form: (TabIndexes in Red)

Note that TabIndex 0 and 10 correspond to the Frames Frame1 and Frame2. If you place the focus on the textbox Text1 and start pressing the Tab key, the focus moves through all the controls in the following order (based on the TabIndex): 1->2->3->4->5->6->7->8->9.

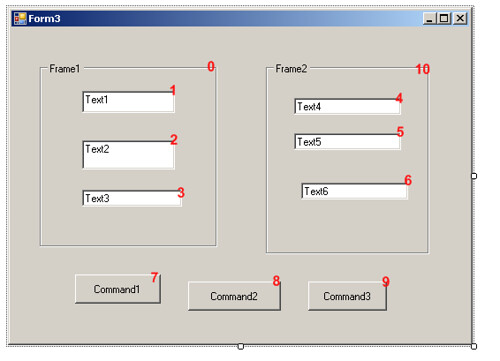

After the migration, we have the same form but in .NET. We still keep the same TabIndex for the components, as shown below:

In this case TabIndex 0 and 10 correspond to the GroupBoxes Frame1 and Frame2. In .NET, however, if you start pressing the Tab key, the focus moves in the following order: 1->2->3->7->8->9->4->5->6. As you can see, it goes through the buttons (7, 8 and 9) first, instead of going through the textboxes. Replicating the VB6 behavior requires an incredibly easy fix (just changing the TabIndex on the GroupBox), but I thought it would be interesting to throw this one out there. This is one of the scenarios where there is not much that the VBUC can do (it is setting the properties correctly during the migration). It is just a difference in behavior between VB6 and .NET for which a manual change is necessary.
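One way to picture the difference is with a toy model of the control tree. The sketch below is not Windows Forms code; `Node`, `TabOrderModel` and `BuildForm` are made-up names, and the layout is an assumption (the post does not say which controls live in which frame, so here Frame2 is assumed to hold controls 4 to 6 and everything else sits directly on the form). It compares a flat ordering by TabIndex with an ordering where a container's children are visited when the container's own TabIndex comes up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy model of a control tree; this is NOT the Windows Forms API.
public class Node
{
    public string Name;
    public int TabIndex;
    public bool IsContainer;
    public List<Node> Children = new List<Node>();

    public Node(string name, int tabIndex, bool isContainer = false)
    {
        Name = name;
        TabIndex = tabIndex;
        IsContainer = isContainer;
    }
}

public static class TabOrderModel
{
    // Flat order: every non-container control sorted by TabIndex alone.
    public static List<int> FlatOrder(Node form)
    {
        return Leaves(form).OrderBy(n => n.TabIndex).Select(n => n.TabIndex).ToList();
    }

    // Hierarchical order: siblings are sorted by TabIndex, and a container's
    // children are visited when the container's own turn comes.
    public static List<int> HierarchicalOrder(Node parent)
    {
        var result = new List<int>();
        foreach (Node child in parent.Children.OrderBy(n => n.TabIndex))
        {
            if (child.IsContainer) result.AddRange(HierarchicalOrder(child));
            else result.Add(child.TabIndex);
        }
        return result;
    }

    static IEnumerable<Node> Leaves(Node node)
    {
        foreach (Node child in node.Children)
        {
            if (child.IsContainer)
            {
                foreach (Node leaf in Leaves(child)) yield return leaf;
            }
            else
            {
                yield return child;
            }
        }
    }

    // Assumed layout: Frame1 (TabIndex 0) is empty, Frame2 holds controls 4-6,
    // and controls 1-3 and 7-9 sit directly on the form.
    public static Node BuildForm(int frame2TabIndex)
    {
        var form = new Node("Form1", 0, true);
        form.Children.Add(new Node("Frame1", 0, true));
        var frame2 = new Node("Frame2", frame2TabIndex, true);
        foreach (int i in new[] { 4, 5, 6 }) frame2.Children.Add(new Node("Ctl" + i, i));
        form.Children.Add(frame2);
        foreach (int i in new[] { 1, 2, 3, 7, 8, 9 }) form.Children.Add(new Node("Ctl" + i, i));
        return form;
    }
}
```

Under these assumptions, `HierarchicalOrder(BuildForm(10))` yields 1, 2, 3, 7, 8, 9, 4, 5, 6, while `FlatOrder` yields 1 through 9. Giving the assumed Frame2 a TabIndex of 4 makes the hierarchical order come out as 1 through 9 again, which is the kind of manual change described above.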

Today Eric Nelson covered the quasi-legendary legacy transformation options graph on his blog. Taking into account the 4 basic alternatives for legacy renovation, that is, Replace, Rewrite, Reuse or Migrate, this diagram shows the combination of 2 main factors that might lead to these options: Application Quality and Business Value. As Declan Good mentioned in his “Legacy Transformation” white paper, Application Quality refers to “the suitability of the legacy application in business and technical terms”, based on parameters like effectiveness, functionality, stability of the embedded business rules, stage in the development life cycle, etc. On the other hand, Business Value is related to the level of customization, that is, whether it’s a unique, non-standard system or whether there are suitable replacement packages available.

This diagram represents the basic decision criteria, but there are other issues that must be considered, specifically when evaluating VB to .NET upgrades. For example, as Eric mentions in his blog post, a lot of manual rewrite projects face so many problems that they end up being abandoned. One of ArtinSoft’s recent customers, HSI, went for the automated migration approach after analyzing the implications of a rewrite from scratch. They just couldn’t afford the time, cost and disruption involved. As Ryan Grady, owner of the company in charge of this VB to .NET migration project for HSI, puts it, “very quickly we realized that upgrading the application gave us the ability to have something already and then just improve each part of it as we moved forward. Without question, we would still be working on it if we’d done it ourselves, saving us up to 12 months of development time easily”. Those 12 months translated into a US$160,000 saving for HSI! (You can read the complete case study at ArtinSoft’s website.)

On the other hand, for some companies reusing (i.e. wrapping) their VB6 applications to run on the .NET platform is simply not an option, no matter where the application falls in the aforementioned chart. For example, there are strict regulations in the Financial and Insurance verticals that deem keeping critical applications in an environment that’s no longer officially supported simply unacceptable. Besides, sometimes there’s another drawback to this alternative: it adds more elements to be maintained and two sets of data to be kept synchronized, and it requires the programmers to switch constantly between 2 different development environments.

Therefore, an assessment of a software portfolio before deciding on a legacy transformation method must take into account several factors that are particular to each case, like available resources, budget, time to market, compliance with regulations, and of course, the specific goals you want to achieve through this application modernization project.

During the migration of a simple project, we found an interesting migration detail.

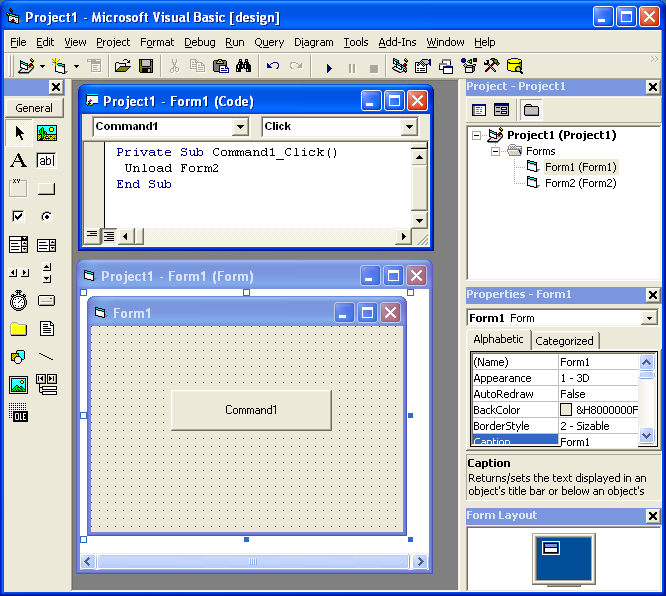

The solution has a project with two forms, Form1 and Form2. Form1 has a command button, and the Click event handler for that button runs code like Unload Form2.

It could happen that Form2 has not been loaded at that point; in VB6 this is not a problem. In .NET the code will be something like form2.Close(), and it could cause problems.

A possible fix is to add a flag that indicates whether the form was instantiated, and to make the call only in that case.
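A minimal sketch of that kind of guard, assuming the form is held in an ordinary field or variable. Instead of a separate boolean flag, this version uses the reference itself (null or already disposed) as the indicator; `FormUnloadHelper` and `UnloadIfLoaded` are hypothetical names, not something the migration tool generates.

```csharp
using System.Windows.Forms;

public static class FormUnloadHelper
{
    // Closes the form only if it was actually created and is still alive.
    // Returns true when Close() was called, false when there was nothing to unload.
    public static bool UnloadIfLoaded(Form form)
    {
        if (form == null || form.IsDisposed)
        {
            return false;
        }
        form.Close();
        return true;
    }
}
```

In the button's Click handler the migrated call would then look like `FormUnloadHelper.UnloadIfLoaded(form2);` rather than a bare `form2.Close();`.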

Recently, while following a post in an AS400 Java group, I saw someone ask about a method for signing and verifying a file with PGP.

I thought, "Damn, that seems like a very common thing, it shouldn't be that difficult", and started googling for it.

I found, as the poster said, that the Bouncy Castle API can be used, but it was not easy.

Well, so I learned a lot about PGP and Bouncy Castle, and thank God, Tamas Perlaky posted a great sample that signs a file, so I didn't have to spend a lot of time trying to figure it out.

I'm copying Tamas's post because I had problems accessing the site, so here is the post just as Tamas published it:

"To build this you will need to obtain the following depenencies. The Bouncy Castle versions may need to be different based on your JDK level.

bcpg-jdk15-141.jar

bcprov-jdk15-141.jar

commons-cli-1.1.jar

Then you can try something like:

java net.tamas.bcpg.DecryptAndVerifyFile -d test2_secret.asc -p secret -v test1_pub.asc -i test.txt.asc -o verify.txt

And expect to get a verify.txt that's the same as test.txt. Maybe.

Here’s the download: PgpDecryptAndVerify.zip"

And this is the original link: http://www.tamas.net/Home/PGP_Samples.html

Thanks a lot Tamas

I had to access an old VSS database, and nobody remembered any of the passwords, not even the admin password.

The tool from this page was a lifesaver: http://not42.com/2005/06/16/visual-source-safe-admin-password-reset

If you have heavy processes in ColdFusion, a nice way to track them is the jvmstat tool.

You can get the jvmstat tool from http://java.sun.com/performance/jvmstat/

And this post shows some useful information on how to use the tool with ColdFusion:

http://www.petefreitag.com/item/141.cfm

Just more details about scripting

Using the MS Scripting Object

The MS Scripting Object can be used in .NET applications, but it has several limitations.

The main limitation is that all scripted objects must be exposed thru pure COM. The scripting object is a COM component that knows nothing about .NET.

In general you could do something like the following to expose a component thru COM:

[System.Runtime.InteropServices.ComVisible(true)]

public partial class frmTestVBScript : Form

{

//Rest of code

}

NOTE: you can use that code to do a simple exposure of the form to COM Interop. However, to provide a full exposure of a graphical component like a form or user control you should use the Interop Forms Toolkit from Microsoft: http://msdn.microsoft.com/en-us/vbasic/bb419144.aspx

That is enough to expose an object to COM. But most of the properties and methods in the System.Windows.Forms.Form class use native .NET types instead of COM types.

You can see this in the BackColor property example:

public int MyBackColor

{

get { return System.Drawing.ColorTranslator.ToOle(this.BackColor); }

set { this.BackColor = System.Drawing.ColorTranslator.FromOle(value); }

}

Issues:

- The problem with properties such as those is that System.Drawing.Color is not COM exposable.

- Your script will expect an object exposing COM-compatible properties.

- Another problem with that is that there might be some name collision.

Using Forms

In general, to use your scripts without a lot of modifications, you should do something like this:

- Your forms must mimic the interfaces exposed by VB6 forms. To do that you can use a tool like OLE2View and take a look at the interfaces in VB6.OLB

- Using those interfaces create an interface in C#

- Make your forms implement that interface.

- If your customers have forms that they expose thru COM, and those forms add new functionality, do this:

- Create a new interface, that extends the basic one you have and

I’m attaching an application showing how to do this.

Performing a CreateObject and Connecting to the Database

The CreateObject command can still be used. To allow compatibility, the .NET components must expose the same ProgIds that the original components used.
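As a rough sketch of what exposing a ProgId can look like on the .NET side, the class below declares one with the `ProgId` attribute from `System.Runtime.InteropServices`. The class name `Calculator` and the ProgId `MyCompany.Calculator` are made up for illustration; in a real migration the ProgId would be whatever the original component was registered under. `ProgIdInspector` is only a small helper to read the attribute back.

```csharp
using System;
using System.Runtime.InteropServices;

// "MyCompany.Calculator" is a hypothetical ProgId standing in for the one
// the original VB6 component used.
[ComVisible(true)]
[ProgId("MyCompany.Calculator")]
public class Calculator
{
    // COM activation (and therefore CreateObject) needs a public parameterless constructor.
    public Calculator() { }

    public int Add(int a, int b)
    {
        return a + b;
    }
}

public static class ProgIdInspector
{
    // Reads the ProgId declared on a type, or returns null if none is declared.
    public static string GetDeclaredProgId(Type type)
    {
        object[] attrs = type.GetCustomAttributes(typeof(ProgIdAttribute), false);
        return attrs.Length == 0 ? null : ((ProgIdAttribute)attrs[0]).Value;
    }
}
```

Once the assembly is registered for COM interop (for example with regasm), a script should be able to create the object with something like `Set calc = CreateObject("MyCompany.Calculator")`.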

ADODB can still be used, and probably RDO and ADO (these last two I haven’t tried a lot)

So I tried a simple script like the following to illustrate this:

Sub ConnectToDB

'declare the variable that will hold new connection object

Dim Connection

'create an ADO connection object

Set Connection=CreateObject("ADODB.Connection")

'declare the variable that will hold the connection string

Dim ConnectionString

'define connection string, specify database driver and location of the database

ConnectionString = "Driver={SQL Server};Server=MROJAS\SQLEXPRESS;Database=database1;Trusted_Connection=Yes"

'open the connection to the database

Connection.Open ConnectionString

MsgBox "Success Connect. Now lets try to get data"

'declare the variable that will hold our new object

Dim Recordset

'create an ADO recordset object

Set Recordset=CreateObject("ADODB.Recordset")

'declare the variable that will hold the SQL statement

Dim SQL

SQL="SELECT * FROM Employees"

'Open the recordset object executing the SQL statement and return records

Recordset.Open SQL, Connection

'first of all determine whether there are any records

If Recordset.EOF Then

MsgBox "No records returned."

Else

'if there are records then loop through the fields

Do While NOT Recordset.Eof

MsgBox Recordset("EmployeeName") & " -- " & Recordset("Salary")

Recordset.MoveNext

Loop

End If

MsgBox "This is the END!"

End Sub


I tested this code with the sample application I’m attaching. Just paste the code, press Add Code, then type ConnectToDB and executeStatement

I’m attaching an application showing how to do this. Look at extended form. Your users will have to make their forms extend the VBForm interface to expose their methods.

Using Events

Event handling has some issues.

All events have to be renamed. (At least this is my current experience; I have to investigate further. The .NET support for COM events does a binding with the class names. I think there’s a workaround for this, but I have not had the time to test it yet.)

In general you must create an interface with all the events and rename them (in my sample I just renamed them to <Event>2); then you can use these events.

You must also add handlers for the .NET events to raise the COM events.

#region "Events"

public delegate void Click2EventHandler();

public delegate void DblClick2EventHandler();

public delegate void GotFocus2EventHandler();

public event Click2EventHandler Click2;

public event DblClick2EventHandler DblClick2;

public event GotFocus2EventHandler GotFocus2;

public void HookEvents()

{

this.Click += new EventHandler(SimpleForm_Click);

this.DoubleClick += new EventHandler(SimpleForm_DoubleClick);

this.GotFocus += new EventHandler(SimpleForm_GotFocus);

}

void SimpleForm_Click(object sender, EventArgs e)

{

if (this.Click2 != null)

{

try

{

Click2();

}

catch { }

}

}

void SimpleForm_DoubleClick(object sender, EventArgs e)

{

if (this.DblClick2 != null)

{

try

{

DblClick2();

}

catch { }

}

}

void SimpleForm_GotFocus(object sender, EventArgs e)

{

if (this.GotFocus2 != null)

{

try

{

GotFocus2();

}

catch { }

}

}

#endregion

Alternative solutions

Sadly, there isn’t currently a nice solution for scripting in .NET. Some people have done some work to implement something like VBScript in .NET (including myself, as a personal project, but it is not mature enough yet; I would like your feedback to know whether you would be interested in a managed version of VBScript). Currently the most mature solution I have seen is Script.NET, which is a true interpreter (http://www.codeplex.com/scriptdotnet). Microsoft is also working on the DLR (Dynamic Language Runtime), which is the runtime I’m using for my pet VBScript project.

The problem with some of the other solutions is that they let you write scripts in a .NET language like C#, VB.NET or JScript.NET and compile them. That process generates a new assembly, which is then loaded into the running application domain of the .NET virtual machine. Once an assembly is loaded it cannot be unloaded. So if you compile and load a lot of scripts, memory consumption keeps growing. There are some solutions for this memory consumption issue, but they require other changes to your code.

Using other alternatives (unless you use a .NET implementation of VBScript, and currently there isn’t a mature one) will require updating all your user scripts. Most of the new scripting languages are variants of the JavaScript language.

Migration tools for VBScript

There aren’t a lot of tools for this task, but you can use http://slingfive.com/pages/code/scriptConverter/

Download the code from: http://blogs.artinsoft.net/public_img/ScriptingIssues.zip

I saw this with Francisco and this is one possible solution:

ASP Source

rs.Save Response, adPersistXML

rs is an ADODB.RecordSet variable, and its result is being written to the ASP Response

Wrong Migration

rs.Save(Response, ADODB.PersistFormatEnum.adPersistXML); // <-- The ASP.NET Response is not COM; ADODB.Recordset is a COM object

So we cannot write directly to the ASP.NET response. We need a COM Stream object

Solution

ADODB.Stream s = new ADODB.Stream();

rs.Save(s, ADODB.PersistFormatEnum.adPersistXML);

Response.Write(s.ReadText(-1));

In this example an ADODB.Stream object is created, the data is written into it, and then it is flushed to the ASP.NET response.

As you might know, ArtinSoft recently released Aggiorno, a smart tool for fixing pages, improving page structure and enforcing compliance with web standards in a friendly and unobtrusive way. Some remarks on the tool's capabilities can be seen here. Some days ago I had the opportunity to use part of the framework on which the tool is built. This framework will be available in a forthcoming version, allowing users to develop extensions of the tool for their specific needs.

Such needs vary, of course; in our case we are interested in analyzing style sheets (CSS) and style-affecting directives in order to help predict and explain potential differences among browsers. The idea is to include a feature of this nature in a future version of the tool. Note that the intended tool, though similar, should be considerably smarter with respect to CSS understanding than existing (and quite nice) tools like Firebug. Our idea was to implement it using the Aggiorno components; we are still working on it, but so far it has been very easy to get a rich model of a page and its styles for our purposes.

Our intention is to use a special form of temporal reasoning to explain potentially conflicting behaviors at the CSS level. Using the framework we are able to build an automaton (actually a transition system) modeling the CSS behavior (both the intended and the actual one). We incorporate dynamic (rendered, browser-dependent) information in addition to the static information provided by the styles and the page under analysis. This dynamic information is available through Aggiorno’s framework, which is naturally quite nice to have. Just to give you a first impression of the potential, we show a simple artificial example of how we model the styling information under our logic approach here. Our initial feelings about the potential of such a framework are quite encouraging, so we hope it becomes public very soon for similar or better developments according to your own needs.

In VB6 you could create an OutOfProcess instance to execute some actions. There is no direct equivalent for that in .NET. However, you can run a class in another application domain to produce a similar effect, which can be helpful in a couple of scenarios.

This example consists of two projects. One is a console application, and the other is a class library that holds a class that we want to run like an "OutOfProcess" instance. In this scenario, the console application does not necessarily know the type of the object beforehand. This technique can be used, for example, for a plugin or add-in implementation.

Code for Console Application

using System;

using System.Text;

using System.IO;

using System.Reflection;

namespace OutOfProcess

{

/// <summary>

/// This example shows how to create an object in an

/// OutOfProcess similar way.

/// In VB6 you were able to create an ActiveX-EXE, so you could create

/// objects that execute in their own process space.

/// In some scenarios this can be achieved in .NET by creating

/// instances that run in their own

/// 'ApplicationDomain'.

/// This simple class shows how to do that.

/// Disclaimer: This is a quick and dirty implementation.

/// The idea is get some comments about it.

/// </summary>

class Program

{

delegate void ReportFunction(String message);

class RemoteTextWriter : TextWriter

{

ReportFunction report;

public RemoteTextWriter(ReportFunction report)

{

this.report = report;

}

public override Encoding Encoding

{

get

{

return new UnicodeEncoding(false, false);

}

}

public override void Flush()

{

//Nothing to do here

}

public override void Write(char value)

{

//ignore

}

public override void Write(string value)

{

report(value);

}

public override void WriteLine(string value)

{

report(value);

}

//This is very important, especially if you have a long-running process.

// Remoting has a concept called Lifetime Management.

//Returning null from this method makes your remoting objects immortal

public override object InitializeLifetimeService()

{

return null;

}

}

static void ReportOut(String message)

{

Console.WriteLine("[stdout] " + message);

}

static void ReportError(String message)

{

ConsoleColor oldColor = Console.ForegroundColor;

Console.ForegroundColor = ConsoleColor.Red;

Console.WriteLine("[stderr] " + message);

Console.ForegroundColor = oldColor;

}

static void ExecuteAsOutOfProcess(String assemblyFilePath,String typeName)

{

RemoteTextWriter outWriter = new RemoteTextWriter(ReportOut);

RemoteTextWriter errorWriter = new RemoteTextWriter(ReportError);

//<-- This is my path, change it for your app

//Type superProcessType = AspUpgradeAssembly.GetType("OutOfProcessClass.SuperProcess");

AppDomain outofProcessDomain =

AppDomain.CreateDomain("outofprocess_test1",

AppDomain.CurrentDomain.Evidence,

AppDomain.CurrentDomain.BaseDirectory,

AppDomain.CurrentDomain.RelativeSearchPath,

AppDomain.CurrentDomain.ShadowCopyFiles);

//When the invoke member is called this event must return the assembly

AppDomain.CurrentDomain.AssemblyResolve += new ResolveEventHandler(outofProcessDomain_AssemblyResolve);

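//Remember the path before creating the instance, so that the AssemblyResolve

//handler can find the assembly as soon as it is needed

assemblyPath = assemblyFilePath;

Object outofProcessObject =

outofProcessDomain.CreateInstanceFromAndUnwrap(

assemblyFilePath, typeName);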

outofProcessObject.

GetType().InvokeMember("SetOut",

BindingFlags.Public | BindingFlags.Instance | BindingFlags.InvokeMethod,

null, outofProcessObject, new object[] { outWriter });

outofProcessObject.

GetType().InvokeMember("SetError",

BindingFlags.Public | BindingFlags.Instance | BindingFlags.InvokeMethod,

null, outofProcessObject, new object[] { errorWriter });

outofProcessObject.

GetType().InvokeMember("Execute",

BindingFlags.Public | BindingFlags.Instance | BindingFlags.InvokeMethod,

null, outofProcessObject, null);

Console.ReadLine();

}

static void Main(string[] args)

{

string testAssemblyPath =

@"B:\OutOfProcess\OutOfProcess\OutOfProcessClasss\bin\Debug\OutOfProcessClasss.dll";

ExecuteAsOutOfProcess(testAssemblyPath, "OutOfProcessClass.SuperProcess");

}

static String assemblyPath = "";

static Assembly outofProcessDomain_AssemblyResolve(object sender, ResolveEventArgs args)

{

try

{

//We must load it to have the metadata and do reflection

return Assembly.LoadFrom(assemblyPath);

}

catch

{

return null;

}

}

}

}

Code for OutOfProcess Class

using System;

using System.Collections.Generic;

using System.Text;

namespace OutOfProcessClass

{

public class SuperProcess : MarshalByRefObject

{

public void SetOut(System.IO.TextWriter newOut)

{

Console.SetOut(newOut);

}

public void SetError(System.IO.TextWriter newError)

{

Console.SetError(newError);

}

public void Execute()

{

for (int i = 1; i < 5000; i++)

{

Console.WriteLine("running running running ");

if (i%100 == 0) Console.Error.Write("an error happened");

}

}

}

}

We found some machines on which Visual Studio does not show the "Attach To Process" option.

This option is very important for us, especially when testing the migration of a VB6 ActiveX EXE or ActiveX DLL to C#.

There is a bug documented by Microsoft: http://support.microsoft.com/kb/929664

Just follow the Tools > Import and Export Settings wizard to the end. Close and restart VS and the option will reappear.

Also, you might find that the Configuration Manager is not available to switch between Release and Debug, for example.

To fix this problem, just go to Tools -> Options -> Projects and Solutions -> General and make sure the option "Show advanced build configurations" is checked.

Have you ever wished you could modify the way Visual Studio imports a COM class? Well, finally you can.

The Managed, Native, and COM Interop Team (wow, what a name; it sounds like the name of that government office in the Iron Man movie) has released the source code of the TLBIMP tool. I'm more than happy about this.

I can now finally see why some things are imported the way they are.

http://www.codeplex.com/clrinterop

You can also download the P/Invoke assistant. This assistant has a library of signatures so you can invoke any Windows API.

The WebBrowser control for .NET is just a wrapper for the IE ActiveX control. However this wrapper does not expose all the events that the IE ActiveX control exposes.

For example, the ActiveX control has a NewWindow2 event that you can use to intercept the moment when a new window is about to be created, and you can even use the ppDisp variable to supply a pointer to an IE ActiveX instance where you want the new window to be displayed.

So, our solution was to extend the WebBrowser control to make some of those events public.

In general the solution is the following:

- Create a new class for your event that extends one of the basic EventArgs classes.

- Add constructors and property accessors to the class.

- Look at the IE ActiveX info and add the DWebBrowserEvents2 and IWebBrowser2 COM interfaces. We need them to make our hooks.

- Create a WebBrowserExtendedEvents class extending System.Runtime.InteropServices.StandardOleMarshalObject and implementing DWebBrowserEvents2. We need this class to intercept the ActiveX events. Add methods for all the events that you want to intercept.

- Extend the WebBrowser control, overriding the CreateSink and DetachSink methods; this is where the WebBrowserExtendedEvents class is used to make the connection.

- Add an EventHandler for each of the events.

And that's all. Here is the code. Just add it to a file like ExtendedWebBrowser.cs

using System;

using System.Windows.Forms;

using System.ComponentModel;

using System.Runtime.InteropServices;

//First define a new EventArgs class to contain the newly exposed data

public class NewWindow2EventArgs : CancelEventArgs

{

object ppDisp;

public object PPDisp

{

get { return ppDisp; }

set { ppDisp = value; }

}

public NewWindow2EventArgs(ref object ppDisp, ref bool cancel)

: base()

{

this.ppDisp = ppDisp;

this.Cancel = cancel;

}

}

public class DocumentCompleteEventArgs : EventArgs

{

private object ppDisp;

private object url;

public object PPDisp

{

get { return ppDisp; }

set { ppDisp = value; }

}

public object Url

{

get { return url; }

set { url = value; }

}

public DocumentCompleteEventArgs(object ppDisp,object url)

{

this.ppDisp = ppDisp;

this.url = url;

}

}

public class CommandStateChangeEventArgs : EventArgs

{

private long command;

private bool enable;

public CommandStateChangeEventArgs(long command, ref bool enable)

{

this.command = command;

this.enable = enable;

}

public long Command

{

get { return command; }

set { command = value; }

}

public bool Enable

{

get { return enable; }

set { enable = value; }

}

}

//Extend the WebBrowser control

public class ExtendedWebBrowser : WebBrowser

{

AxHost.ConnectionPointCookie cookie;

WebBrowserExtendedEvents events;

//This method will be called to give you a chance to create your own event sink

protected override void CreateSink()

{

//MAKE SURE TO CALL THE BASE or the normal events won't fire

base.CreateSink();

events = new WebBrowserExtendedEvents(this);

cookie = new AxHost.ConnectionPointCookie(this.ActiveXInstance, events, typeof(DWebBrowserEvents2));

}

public object Application

{

get

{

IWebBrowser2 axWebBrowser = this.ActiveXInstance as IWebBrowser2;

if (axWebBrowser != null)

{

return axWebBrowser.Application;

}

else

return null;

}

}

protected override void DetachSink()

{

if (null != cookie)

{

cookie.Disconnect();

cookie = null;

}

base.DetachSink();

}

//This new event will fire for the NewWindow2

public event EventHandler<NewWindow2EventArgs> NewWindow2;

protected void OnNewWindow2(ref object ppDisp, ref bool cancel)

{

EventHandler<NewWindow2EventArgs> h = NewWindow2;

NewWindow2EventArgs args = new NewWindow2EventArgs(ref ppDisp, ref cancel);

if (null != h)

{

h(this, args);

}

//Pass the cancellation chosen back out to the events

//Pass the ppDisp chosen back out to the events

cancel = args.Cancel;

ppDisp = args.PPDisp;

}

//This new event will fire for the DocumentComplete

public event EventHandler<DocumentCompleteEventArgs> DocumentComplete;

protected void OnDocumentComplete(object ppDisp, object url)

{

EventHandler<DocumentCompleteEventArgs> h = DocumentComplete;

DocumentCompleteEventArgs args = new DocumentCompleteEventArgs( ppDisp, url);

if (null != h)

{

h(this, args);

}

//Pass the ppDisp chosen back out to the events

ppDisp = args.PPDisp;

//I think url is readonly

}

//This new event will fire for the CommandStateChange

public event EventHandler<CommandStateChangeEventArgs> CommandStateChange;

protected void OnCommandStateChange(long command, ref bool enable)

{

EventHandler<CommandStateChangeEventArgs> h = CommandStateChange;

CommandStateChangeEventArgs args = new CommandStateChangeEventArgs(command, ref enable);

if (null != h)

{

h(this, args);

}

}

//This class will capture events from the WebBrowser

public class WebBrowserExtendedEvents : System.Runtime.InteropServices.StandardOleMarshalObject, DWebBrowserEvents2

{

ExtendedWebBrowser _Browser;

public WebBrowserExtendedEvents(ExtendedWebBrowser browser)

{ _Browser = browser; }

//Implement whichever events you wish

public void NewWindow2(ref object pDisp, ref bool cancel)

{

_Browser.OnNewWindow2(ref pDisp, ref cancel);

}

//Implement whichever events you wish

public void DocumentComplete(object pDisp,ref object url)

{

_Browser.OnDocumentComplete( pDisp, url);

}

//Implement whichever events you wish

public void CommandStateChange(long command, bool enable)

{

_Browser.OnCommandStateChange( command, ref enable);

}

}

[ComImport, Guid("34A715A0-6587-11D0-924A-0020AFC7AC4D"), InterfaceType(ComInterfaceType.InterfaceIsIDispatch), TypeLibType(TypeLibTypeFlags.FHidden)]

public interface DWebBrowserEvents2

{

[DispId(0x69)]

void CommandStateChange([In] long command, [In] bool enable);

[DispId(0x103)]

void DocumentComplete([In, MarshalAs(UnmanagedType.IDispatch)] object pDisp, [In] ref object URL);

[DispId(0xfb)]

void NewWindow2([In, Out, MarshalAs(UnmanagedType.IDispatch)] ref object pDisp, [In, Out] ref bool cancel);

}

[ComImport, Guid("D30C1661-CDAF-11d0-8A3E-00C04FC9E26E"), TypeLibType(TypeLibTypeFlags.FOleAutomation | TypeLibTypeFlags.FDual | TypeLibTypeFlags.FHidden)]

public interface IWebBrowser2

{

[DispId(100)]

void GoBack();

[DispId(0x65)]

void GoForward();

[DispId(0x66)]

void GoHome();

[DispId(0x67)]

void GoSearch();

[DispId(0x68)]

void Navigate([In] string Url, [In] ref object flags, [In] ref object targetFrameName, [In] ref object postData, [In] ref object headers);

[DispId(-550)]

void Refresh();

[DispId(0x69)]

void Refresh2([In] ref object level);

[DispId(0x6a)]

void Stop();

[DispId(200)]

object Application { [return: MarshalAs(UnmanagedType.IDispatch)] get; }

[DispId(0xc9)]

object Parent { [return: MarshalAs(UnmanagedType.IDispatch)] get; }

[DispId(0xca)]

object Container { [return: MarshalAs(UnmanagedType.IDispatch)] get; }

[DispId(0xcb)]

object Document { [return: MarshalAs(UnmanagedType.IDispatch)] get; }

[DispId(0xcc)]

bool TopLevelContainer { get; }

[DispId(0xcd)]

string Type { get; }

[DispId(0xce)]

int Left { get; set; }

[DispId(0xcf)]

int Top { get; set; }

[DispId(0xd0)]

int Width { get; set; }

[DispId(0xd1)]

int Height { get; set; }

[DispId(210)]

string LocationName { get; }

[DispId(0xd3)]

string LocationURL { get; }

[DispId(0xd4)]

bool Busy { get; }

[DispId(300)]

void Quit();

[DispId(0x12d)]

void ClientToWindow(out int pcx, out int pcy);

[DispId(0x12e)]

void PutProperty([In] string property, [In] object vtValue);

[DispId(0x12f)]

object GetProperty([In] string property);

[DispId(0)]

string Name { get; }

[DispId(-515)]

int HWND { get; }

[DispId(400)]

string FullName { get; }

[DispId(0x191)]

string Path { get; }

[DispId(0x192)]

bool Visible { get; set; }

[DispId(0x193)]

bool StatusBar { get; set; }

[DispId(0x194)]

string StatusText { get; set; }

[DispId(0x195)]

int ToolBar { get; set; }

[DispId(0x196)]

bool MenuBar { get; set; }

[DispId(0x197)]

bool FullScreen { get; set; }

[DispId(500)]

void Navigate2([In] ref object URL, [In] ref object flags, [In] ref object targetFrameName, [In] ref object postData, [In] ref object headers);

[DispId(0x1f7)]

void ShowBrowserBar([In] ref object pvaClsid, [In] ref object pvarShow, [In] ref object pvarSize);

[DispId(-525)]

WebBrowserReadyState ReadyState { get; }

[DispId(550)]

bool Offline { get; set; }

[DispId(0x227)]

bool Silent { get; set; }

[DispId(0x228)]

bool RegisterAsBrowser { get; set; }

[DispId(0x229)]

bool RegisterAsDropTarget { get; set; }

[DispId(0x22a)]

bool TheaterMode { get; set; }

[DispId(0x22b)]

bool AddressBar { get; set; }

[DispId(0x22c)]

bool Resizable { get; set; }

}

}

ArtinSoft’s top-selling product, the Visual Basic Upgrade Companion, is improved daily by the Product Department to satisfy the requirements of the migration projects currently in progress. This project-driven research methodology allows our company to deliver custom solutions for our customers' needs and, more importantly, to enhance our products' capabilities with all the research done for these purposes.

Our company’s largest customer engaged our consulting department to request a customization of the VBUC to generate specific naming patterns in the resulting source code. To be more specific, the resulting source code must comply with some specific naming standards, plus a mappings customization for a 3rd-party control (FarPoint’s FPSpread).

This request pushed ArtinSoft to re-architect the VBUC's renaming engine, which at the time was able to rename user declarations only in some scenarios (.NET reserved keywords, collisions and more).

The re-architecture consisted of centralizing the renaming rules into a single-layered engine. Those rules were extracted from the Companion’s parser and mapping files and relocated into a renaming declaration library. The most important change is that the renaming engine now evaluates every declaration instead of only the conflicting ones. This enhanced renaming mechanism generates a new name for each conflicting declaration and returns the declaration unchanged otherwise.
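To make the "evaluate every declaration, rename only the conflicting ones" idea concrete, here is a tiny rule-based renamer. It is purely illustrative and is not the VBUC's actual engine: `RenamingEngine`, `SampleRules`, the `_Renamed` suffix and the short keyword list are all assumptions made up for this sketch.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: a tiny rule-based renamer, not the VBUC's actual engine.
public class RenamingEngine
{
    // A rule returns a new name for a conflicting declaration,
    // or null when the rule does not apply.
    private readonly List<Func<string, string>> rules = new List<Func<string, string>>();

    public void AddRule(Func<string, string> rule)
    {
        rules.Add(rule);
    }

    // Every declaration is evaluated; the first matching rule wins, and a
    // declaration that no rule matches is returned unchanged.
    public string Rename(string declaration)
    {
        foreach (Func<string, string> rule in rules)
        {
            string renamed = rule(declaration);
            if (renamed != null) return renamed;
        }
        return declaration;
    }
}

public static class SampleRules
{
    // A small, hypothetical subset of C# reserved words.
    private static readonly HashSet<string> Keywords =
        new HashSet<string> { "class", "event", "object", "string" };

    // Hypothetical rule: add a suffix to declarations that collide with a reserved keyword.
    public static string ReservedKeyword(string name)
    {
        return Keywords.Contains(name) ? name + "_Renamed" : null;
    }
}
```

With `SampleRules.ReservedKeyword` added through `AddRule`, `Rename("event")` returns `event_Renamed` while `Rename("Customer")` comes back untouched. New rules plug in through `AddRule` without changing the engine, which is the general shape of extensibility this sketch is meant to suggest.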

The renaming engine can literally “filter” all the declarations and fix possible renaming issues. But the story does not end here: thanks to our company’s proprietary language technology (Kablok), the renaming engine is completely extensible.

Jafet Welsh, from the product development department, is a member of the team who implemented the new renaming engine and the extensibility library, and he explained some details about this technology:

“…The extensibility library seamlessly integrates new rules (written in Kablok) into the renaming engine… we described a series of rules for classes, variables, properties and other user declarations to satisfy our customer's code standards using the renaming engine extensibility library… and we plan to add support for a rules-describing mechanism to allow the users to write renaming rules on their own…”

ArtinSoft incorporated the renaming engine into VBUC version 2.1, and the extensibility library will be completed for version 2.2.

In our previous post on parallel programming we gave some pointers to models of nested data parallelism (NDP), as offered in Intel’s Ct and in Haskell derivatives in the form of a parallel list or array (Post).

We want to complement our set of references by mentioning another quite interesting project by Microsoft, namely Parallel Language Integrated Query, PLINQ (J. Duffy), developed as an extension of LINQ. PLINQ is a component of Parallel FX. We base this post on the indicated reference.

We find this development particularly interesting given its potential, if thin, relation to pattern matching (PM), which we might exploit in some particular schemas, as we suggested in our post. That would be the case if we eventually aim at PM-based code over (massive) data sources (i.e. by means of rule-based programs).

As you might know, LINQ offers uniform and natural access to (CLR-supported) heterogeneous data sources by means of an IEnumerable<T> interface that abstracts the result of the query. Query results are (lazily) consumable by means of iteration, as in a foreach (T t in q) statement, or directly by forcing them into other data forms. A set of query operators (from, where, join, etc.), most of them with nice syntax support via extension methods, is offered; they can be considered phases of gathering and then filtering, joining, etc., the input data sources.

For PLINQ, as we can notice, the path followed is essentially via a special data structure represented by the type IParallelEnumerable<T> (which extends IEnumerable<T>). For instance, consider the following snippet model given by the author:

IEnumerable<T> data = ...;

var q = data.AsParallel().Where(x => p(x)).OrderBy(x => k(x)).Select(x => f(x));

foreach (var e in q) a(e);

A call to the extension method AsParallel is apparently the main requirement from the programmer's point of view. In addition, three models of parallelism are available to process query results: pipelined, stop-and-go and inverted enumeration. Pipelined processing synchronizes the threads dedicated to the query so that they (incrementally) feed the separate enumeration thread. This is normally the default behavior, for instance under the processing schema:

foreach (var e in q) {

a(e);

}

Stop-and-go joins the enumeration with the query so that it waits until the query threads are done. This model is chosen in some cases, for instance when the query is completely forced.

Inverted enumeration uses a lambda expression provided by the user, which is applied to each element of the query. That avoids any synchronization issue but requires using the special PLINQ ForAll extension method, not (yet?) covered by the sugared syntax. As indicated by the author, it takes the following form:

var q = ... some query ...;

q.ForAll(e => a(e));

As in other cases, however, side effects must be avoided under this model, and that is solely the programmer's responsibility.
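The snippets above are models rather than complete programs. As a rough, runnable sketch, the following assumes the API as it later shipped in .NET (System.Linq.ParallelEnumerable, where the query type is ParallelQuery<T>), which may differ in naming from the preview described in this post. The predicate, key and projection are made up for illustration. The first method forces the whole query with ToArray, and the second uses ForAll with a thread-safe collection, since the lambda may run on several threads at once.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;

public static class PlinqDemo
{
    // Forces the whole query: results are only returned once all of them are computed.
    public static int[] SquaresOfEvens(int[] data)
    {
        return data.AsParallel()
                   .Where(x => x % 2 == 0)   // p(x): keep even numbers
                   .OrderBy(x => x)          // k(x): order by the value itself
                   .Select(x => x * x)       // f(x): square
                   .ToArray();
    }

    // Inverted enumeration: the action runs on the query threads, so the
    // action itself must be thread-safe (here it adds to a ConcurrentBag).
    public static int SumOfSquaresOfEvens(int[] data)
    {
        ConcurrentBag<int> bag = new ConcurrentBag<int>();
        data.AsParallel()
            .Where(x => x % 2 == 0)
            .ForAll(x => bag.Add(x * x));
        return bag.Sum();
    }

    public static void Main()
    {
        int[] data = { 5, 2, 8, 1, 4 };
        Console.WriteLine(string.Join(", ", SquaresOfEvens(data))); // 4, 16, 64
        Console.WriteLine(SumOfSquaresOfEvens(data));               // 84
    }
}
```

ForAll gives no ordering guarantee, which is why the second method only computes an order-independent result.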

If you have some .NET code that you want to share with VB6, COM has always been a nice option. You just add a couple of ComVisible attributes and that's all.

But...

Collections can be a little tricky.

This is a simple example of how to expose your collections to VB6.

Here I create an ArrayList descendant that you can use to expose your collections.

Just create a new C# class library project and add the code below.

Remember to check the "Register for COM interop" setting.

using System;

using System.Collections.Generic;

using System.Text;

using System.Runtime.InteropServices;

namespace CollectionsInterop

{

[Guid("0490E147-F2D2-4909-A4B8-3533D2F264D0")]

[ComVisible(true)]

public interface IMyCollectionInterface

{

int Add(object value);

void Clear();

bool Contains(object value);

int IndexOf(object value);

void Insert(int index, object value);

void Remove(object value);

void RemoveAt(int index);

[DispId(-4)]

System.Collections.IEnumerator GetEnumerator();

[DispId(0)]

[System.Runtime.CompilerServices.IndexerName("_Default")]

object this[int index]

{

get;

}

}

[ComVisible(true)]

[ClassInterface(ClassInterfaceType.None)]

[ComDefaultInterface(typeof(IMyCollectionInterface))]

[ProgId("CollectionsInterop.VB6InteropArrayList")]

public class VB6InteropArrayList : System.Collections.ArrayList, IMyCollectionInterface

{

#region IMyCollectionInterface Members

// COM friendly strong typed GetEnumerator

[DispId(-4)]

public override System.Collections.IEnumerator GetEnumerator()

{

return base.GetEnumerator();

}

#endregion

}

/// <summary>

/// Simple object for example

/// </summary>

[ComVisible(true)]

[ClassInterface(ClassInterfaceType.AutoDual)]

[ProgId("CollectionsInterop.MyObject")]

public class MyObject

{

String value1 = "nulo";

public String Value1

{

get { return value1; }

set { value1 = value; }

}

String value2 = "nulo";

public String Value2

{

get { return value2; }

set { value2 = value; }

}

}

}

To test this code you can use this VB6 code. Remember to add a reference to this class library.

Private Sub Form_Load()

Dim simpleCollection As New CollectionsInterop.VB6InteropArrayList

Dim value As New CollectionsInterop.MyObject

value.Value1 = "Mi valor1"

value.Value2 = "Autre valeur"

simpleCollection.Add value

For Each c In simpleCollection

MsgBox c.Value1

Next

End Sub

Parallel programming has always been an interesting area, recognized as a challenging one; it is again quite full of life due to the current proliferation of parallel architectures available at the "regular" PC level, such as multi-core processors, SIMD architectures and co-processors like stream processors, as found in Graphics Processing Units (GPUs).

A key question derived from such a trend is, naturally, how the development of parallel processors will influence programming paradigms, languages and patterns in the near future. How should programming languages allow regular programmers to take real advantage of parallelism without adding another huge dimension of complexity to the already intricate software development process, and without penalizing the performance of software engineering tasks or application performance, portability and scalability?

Highly motivated by these questions, we want to present a modest overview in this post using our understanding of some interesting references and personal points of view.

General Notions

Some months ago someone working at Intel Costa Rica asked me about multi-core and programming languages during an informal interview, actually as a kind of test, which I am afraid I probably did not pass. However, the question remains very interesting (and is surprisingly related to some of our previous posts on ADTs and program understanding via functional programming, FP). So I want to give myself another chance and try to cover some part of the question in a humble fashion. More exactly, I just want to recall some nice, well-known programming notions that lie behind the way the matter has been attacked by industry initiatives.

For such a purpose, I have found a couple of interesting sources to build upon, as well as recent events that address exactly the mentioned issue and serve as motivation. First, Intel announced last year the Ct programming model (Ghuloum et al), and we also have a report on the BSGP programming language developed by people at Microsoft Research (Hou et al). We refer to the sources for more specific details about both developments. We do not claim these are the only ones, of course; we just use them as interesting examples.

Ct is actually an API over C/C++ (its name stands for C/C++ throughput) for portability and backward compatibility; it is intended for use on multi-core and tera-scale Intel architectures but is practicable on more specific ones like GPUs, at least in principle. On the other hand, BSGP (based on Valiant's BSP model) mainly addresses GPU programming. The language has its own primitives (spawn and barrier among the most important) but again looks like C at the usual language level. Thus, the surface does not reveal any pointer to FP, in appearance.

In the general case, the main concern with respect to parallel programming is clear: how can we program in a parallel language without compromising, constraining or deforming the algorithmic expression because of a particular architecture, at least not too much? What kind of uniform, high-level programming concepts can be used that behave in a scalable, portable and even predictable way on different parallel architectures, such that program structure can be derived, understood and maintained without enormous effort and cost, and such that the compiler is still able to map them efficiently to the specific target, as much as possible?

Naturally, we already have general programming notions like threads, so parallel/concurrent programs usually get expressed using such concepts, which are strongly supported by multi-core architectures. Likewise, SIMD offers data parallelism without demanding a particular mindset from the programmer. But data "locality" is necessary in this case for it to be effective. Such a requirement turns out to be unrealistic in (so-called irregular) algorithms using dynamic data structures where data references (aliases and indirections) are quite normal (sparse matrices and trees, for instance). Thus low memory latency might turn out to be computationally useless in such circumstances, as is well known.

On the other hand, multithreading requires efficient (intra/inter-core) synchronization and coherent inter-core memory communication. Process/task decomposition should minimize latency due to synchronization. Besides that, data-driven decomposition is in general easier to grasp than task-driven decomposition. Thus, such notions of parallelism still involve specific low-level programming considerations in order to strike a good balance between multi-core and SPMD models, and even MPMD.

Plenty of literature dating back to the eighties and nineties already shows several interesting approaches to achieving data parallelism (DP) that take these issues into consideration in a uniform way; thus, it is not surprising that Ct is driven by the notion of nested data parallelism (NDP). We notice that BSGP addresses (stream-based) GPU architectures in order to build a simpler programming model than, for instance, CUDA. NDP is not explicitly supported there, but similar principles can be recognized, as we explain later on.

Interestingly, the seminal works on NDP point directly to the paradigm of functional programming (FP), specifically as prototyped in the Nesl language of Blelloch and in corresponding derivations based on Haskell (Nepal, by Chakravarty, Keller et al).

FP brings us, as you might realize, back to our ADTs, so my detour is not too far away from my usual biased stuff as I promised above. As Peyton Jones had predicted, FP will be more popular due to its intrinsic parallel nature. And even though developments like Ct and BSGP are not explicitly expressed as FP models (I am sorry to say), my basic understanding of the corresponding primitives and semantics was actually easier only when I was able to relocate it in its FP-ADT origin. Thus, to get an idea of what an addReduce or a pack of Ct mean, or similarly a reduce(op, x) of BSGP, was simpler by thinking in terms of FP. But this is naturally just me.

Parallelism Requirements and FP combinators

Parallel programs can directly indicate, using primitives or the like, where parallel tasks take place and where tasks synchronize. But it would be better if the intended program structure were not hidden by low-level parallel constraints. In fact, we have to ensure that the program control structure remains intentionally sequential (deterministic), because otherwise reasoning about and predicting behavior can become hard. For instance, we want to be able to reason that if we add more processors (cores), program performance will (essentially) scale. In addition, debugging is usually easier if the programmer thinks sequentially during program development and testing.

Hence, if we want to preserve natural programming structures during coding and avoid special (essentially compiler-targeting) statements or the like to specify parallel control, we have to write programs in a way that lets the compiler derive parallelism opportunities from the program structure. For such a purpose we need to make use of some sort of regular programming structures, in other words, a kind of pattern for formulating algorithms. That is exactly the goal the Ct development pursues.

FP theory showed decades ago that a family of so-called combinators exists for expressing regular and frequent algorithmic solution patterns. Combinators are a kind of building block for more general algorithms; they are based on homomorphisms on (abstract) data types. The main members of this family are the map and reduce (aka fold) combinators. They are part of the theory of lists, the famous Bird-Meertens formalism (BMF), as you might know. Symbolically, map can be defined as follows:

map(f, [x1,...,xn]) = [f(x1),..., f(xn)] (for n>= 0)

where, as usual, brackets denote lists and f is an operation applied to each element of a list, collecting the results in a new list that preserves the original order (the map of an empty list is the empty list). As is easy to see, many algorithms that iterate over collections in this way are instances of map. My favorite example for my former FP students was: "raise the final grade of every student in the class by 5%". But more serious algorithms of linear algebra are also closely related to the map pattern, so they are ubiquitous in graphics and simulation applications (pointing to GPUs).

Map is naturally parallel; the n applications of operation "f" could take place simultaneously in pure FP (no side effects). Hence, expressing tasks using map allows parallelism. Moreover, map can be regarded as a for-each iteration block able to run in parallel. List comprehensions in FP are another very natural way to realize map.
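As a small illustration outside Ct or Nepal, the same map pattern can be sketched in C# with LINQ, where Select plays the role of map. This is only an analogy under that assumption: MapDemo and its method names are made up, and the "5% raise" is the classroom example mentioned above. Because the function applied to each element has no side effects, the sequential and the parallel version produce the same list.

```csharp
using System;
using System.Linq;

public static class MapDemo
{
    // map(f, xs) with f = "raise by 5%", evaluated sequentially.
    public static double[] RaiseGrades(double[] grades)
    {
        return grades.Select(g => g * 1.05).ToArray();
    }

    // The same map evaluated in parallel; AsOrdered keeps the original order,
    // matching the definition of map, which preserves list order.
    public static double[] RaiseGradesParallel(double[] grades)
    {
        return grades.AsParallel()
                     .AsOrdered()
                     .Select(g => g * 1.05)
                     .ToArray();
    }

    public static void Main()
    {
        double[] grades = { 60.0, 80.0, 100.0 };
        foreach (double g in RaiseGradesParallel(grades))
        {
            Console.WriteLine(g);
        }
    }
}
```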

Nesl's original approach, which is followed by Nepal (a Haskell version supporting NDP) and Ct, is to provide a special data type (a parallel list or array) that implicitly implements a map. In Nepal such lists are denoted by the bracket-colon notation, [: :], and are called parallel arrays. In Ct such a type is provided by the API and is called TVEC. Using a data type to communicate parallelism to the compiler is quite simple to follow and does not obscure the proper algorithm structure. In Nepal notation, as a simple example, we would write something like this using parallel array comprehensions:

[: x*x | x <- [: 1, 2, 3 :] :]

This denotes the map of the square function over the parallel list [:1, 2, 3:]. Notice that this is very similar to the corresponding expression using regular lists. However, it is interesting to notice that, in contrast to normal lists, parallel arrays are not inductively definable; an inductive definition would suggest processing them sequentially, and that would make no sense. Using flat parallel arrays allows DP. However, and that is the key issue, parallel arrays (TVECs also) can be nested; in such a case a flattening process takes place internally in order to apply a parallel function in parallel over a nested structure (a parallel array of parallel arrays), which is not possible in plain DP. Standard and illustrative examples of NDP are divide-and-conquer algorithms, like quicksort (qsort). Using NDP lets the flattening handle recursive calls as parallel calls, too. For instance, as a key part of a qsort algorithm we calculate:

[: qsort(x) | x <- [: lessThan, moreThan :] :]

Assume that lessThan and moreThan are parallel arrays resulting from the previous splitting phase. In this case the flattening stage will "distribute" (replicate) the recursive calls across the data structure. The final result after flattening will be a DP computation requiring a number of threads that depends on the number of array partitions (O(log(n))), not on the number of recursive qsort calls (O(n)), which can help to balance the number of required threads independently of the order of the original array. This example shows that NDP can avoid the potential degeneration of control (task) based parallelism.

Evidently, not every algorithm is a map instance; many of them are sequential reductions from a data type into a "simpler" one. For instance, the length of a list reduces (collapses) a list into a number, as does the sum of all elements of a list. In such a case the threads computing independent results must be synchronized into a combined result. This corresponds to the reduce class of operations. Reduce (associative fold) is symbolically defined as follows:

reduce(e, @, [x1, ..., xn]) = e @ x1 @ ... @ xn

where "@" denotes here an associative infix operator (function of two arguments).

Reduce can in general be harder to realize in parallel than map, due to its own nature; scan/tree-contraction techniques offer one option for providing reduce as a primitive. The bottom line is that reduce steps can be cleanly separated from big preceding map steps. In other words, many algorithms are naturally composed of map phases followed by reduce phases, so particular parallelization and optimization techniques can be employed at the compilation level based on this separation.
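The map-then-reduce shape can again be sketched in C# with LINQ, where Aggregate plays the role of reduce. This is an analogy, not how Ct or BSGP implement it; ReduceDemo and its method names are made up for the example. In the parallel variant each partition starts from the seed, so the sketch assumes that e is the identity of the operator and that the operator is associative and commutative (addition with 0 here).

```csharp
using System;
using System.Linq;

public static class ReduceDemo
{
    // reduce(e, @, xs) evaluated sequentially from left to right.
    public static int Reduce(int e, Func<int, int, int> op, int[] xs)
    {
        return xs.Aggregate(e, op);
    }

    // Parallel reduce: partitions are folded independently and then combined.
    // Assumes e is the identity of op and op is associative and commutative.
    public static int ReduceParallel(int e, Func<int, int, int> op, int[] xs)
    {
        return xs.AsParallel().Aggregate(e, op, op, acc => acc);
    }

    public static void Main()
    {
        int[] xs = { 1, 2, 3, 4 };

        // Map phase: square every element.
        int[] squares = xs.Select(x => x * x).ToArray();

        // Reduce phase: sum the squares (1 + 4 + 9 + 16).
        Console.WriteLine(Reduce(0, (a, b) => a + b, squares));         // 30
        Console.WriteLine(ReduceParallel(0, (a, b) => a + b, squares)); // 30
    }
}
```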

We notice that map steps can be matched with the superstep notion, which is characteristic of the BSP model (which lies beneath the BSGP language). On the other hand, reduce phases are comparable with after-barrier code in this language, or with points where threads must combine their outputs. However, BSGP does not have flattening, as far as we could observe in the report.

If the implemented algorithm splits naturally into well-defined map (superstep) and reduce (barrier) phases, then NDP thinking promotes better threading performance and more readable code that reveals its intent. In addition, many reduce operations can be implemented very efficiently as operations on arrays, driven by previously calculated indexing parallel arrays (used as masks), which are directly supported in Ct (for instance, partition, orReduce, etc.) according to the source. Standard scan algorithms are also supported in the library, so scan-based combinations can be used.

In a forthcoming (and hopefully shorter) second part of this post, we will briefly discuss some applications of NDP to pattern-matching schemas.

I am a firm believer in program understanding, and in the idea that our computer skills will allow us to develop programs that understand programs and, maybe in the future, even write some of them :).

I also believe that natural languages and programming languages are two things with a lot in common.

These are just some ideas about this subject.

"A language conversion translates one language to another language, while a language-level upgrade moves an application from an older version of a language to a modern or more standardized version of that same language. In both cases, the goal is to improve portability and understandability of an application and position that application for subsequent transformation", Legacy Systems, Transformation Strategies by William M. Ulrich.

A natural language conversion is exactly that: translating one language to another language.

Natural language processing and transformation have a lot in common with automated source code migration. There are a lot of grammar studies in both areas, and a lot of common algorithms.

I keep quoting:

"Comparing artificial language and natural language is very helpful to our understanding of the semantics of programming languages, since programming languages are artificial. We can see much similarity between these two kinds of languages:

Both of them must explain "given" languages.

The goal of research on semantics of programming languages is the same as that of natural language: explanation of the meanings of a given language. This is unavoidable for natural language but undesirable for programming language. The latter has often led to post-design analysis of the semantics of programming languages wherein the syntax and informal meaning of the language is treated as given (such as PL/I, Fortran and C). Then the research on the semantics is to understand the semantics of the language in another way or to sort out anomalies, omissions, or defects in the given semantics, which hasn't had much impact on the language design. We have another kind of programming languages that have formal definitions, such as Pascal, Ada, SML. The given semantics allow this kind of programming language to be more robust than the previous ones.

Both of them separate "syntax" and "semantics".

Despite these similarities, the difference between the studies of natural and artificial language is profound. First of all, natural language has existed for thousands of years, and nobody knows who designed the language; but artificial languages are synthesized by logicians and computer scientists to meet some specific design criteria. Thus, "the most basic characteristic of the distinction is the fact that an artificial language can be fully circumscribed and studied in its entirety."

We already have developed a mature system for SYNTAX. In the 1950s, the linguist Chomsky first proposed formal language theory for English, and thus came up with Formal Language Theory, Grammar, Regular Grammar, CFG etc. The "first" application of this theory was to define syntax for Algol and to build a parser for it. The landmarks in the development of formal language theory are: Knuth's parser, and YACC, which is a successful and "final" application of formal language theory.

"

From Cornell University: http://www.cs.cornell.edu/info/projects/nuprl/cs611/fall94notes/cn2/cn2.html

Jing Huang

I would also like to add a reference to an interesting work related to pattern recognition, a technique used both in natural language processing (see for example http://prhlt.iti.es/) and in reverse engineering.

This work is by Francesca Arcelli and Claudia Raibulet from Italy, who are working with the NASA Automated Software Engineering Research Center:

http://smallwiki.unibe.ch/woor2006/woor2006paper3/?action=MimeView

In VB6 it was very simple to add scripting capabilities to your application, just by using the Microsoft Script Control Library.

You can still use this library in .NET, as Roy Osherove's blog shows in

http://weblogs.asp.net/rosherove/articles/dotnetscripting.aspx

However there are some minor details that must be taken care of:

* Objects must be exposed through COM (add the [ComVisible(true)] attribute to the class)

* Add the ComVisible(true) attribute to the AssemblyInfo file

* Make these objects public

* Recommended: put your calls to Eval or ExecuteStatement inside try-catch blocks

And here's an example:

using System;

using System.Windows.Forms;

namespace ScriptingDotNetTest

{

[System.Runtime.InteropServices.ComVisible(true)]

public partial class frmTestVBScript : Form

{

public int MyBackColor

{

get { return System.Drawing.ColorTranslator.ToOle(this.BackColor); }

set { this.BackColor = System.Drawing.ColorTranslator.FromOle(value); }

}

MSScriptControl.ScriptControl sc = new MSScriptControl.ScriptControl();

private void RunScript(Object eventSender, EventArgs eventArgs)

{

try

{

sc.Language = "VbScript";

sc.Reset();

sc.AddObject("myform", this, true);

sc.ExecuteStatement("myform.MyBackColor = vbRed");

}

catch

{

MSScriptControl.IScriptControl iscriptControl = sc as MSScriptControl.IScriptControl;

lblError.Text = "ERROR" + iscriptControl.Error.Description + " | Line of error: " + iscriptControl.Error.Line + " | Code error: " + iscriptControl.Error.Text;

}

}

[STAThread]

static void Main()

{

Application.Run(new frmTestVBScript());

}

}

}

TIP: If you don't find the reference in the COM tab, just browse to c:\windows\system32\msscript.ocx

When people decide to migrate their VB6 applications, they eventually end up asking where they should go. Is VB.NET or C# a good choice?

I have my personal preference, but my emphasis is on developing the technology to take you where YOU want to go.

VB.NET is a VB dialect very similar to VB6. It supports several of the same constructs, which makes the changes easier.

C# has several differences from VB6, but it is a growing language with lots of enthusiasts in its community.

Obviously, migrating VB6 to a VB dialect is far easier than migrating to a different language.

However, we are a research company with years of work in this area, and challenges are just what we love.

Let's use a metaphor here.

My beautiful wife was born in Moscow, Russia. Like her, I really enjoy reading a good book. Some of my favorite authors are Russian authors like Dostoevsky, Tolstoy and Chekhov. However, I still do not speak Russian. I have tried, and I will keep trying, but I still don't know Russian. I have read only translations of their books, and I really enjoy them.

As a native speaker, my wife always tells me that it is not the same to read those books in a language other than Russian. And there are phrases (especially in Chekhov's books) that I might not completely understand, but I really got the author's message and enjoyed it.

Translating a book from Russian to a more similar language like Ukrainian is easier than translating it to English or Spanish.

But I think everybody agrees that it is a task that can be done.

You can come up with terrible worst-case scenarios, but these scenarios must be analyzed.

Let's see (I took this example from the link that Francesco put in my previous post http://blogs.artinsoft.net/mrojas/archive/2008/08/07/vb-migration-not-for-the-weak-of-mind.aspx)

If you have code like this:

Sub CopyFiles(ByVal throwIfError As Boolean)

If Not throwIfError Then On Error Resume Next

Dim fso As New FileSystemObject

fso.CopyFile "sourcefile1", "destfile1"

fso.CopyFile "sourcefile2", "destfile2"

fso.CopyFile "sourcefile3", "destfile3"

' seven more CopyFile method calls …

End Sub

and you translate it to:

void CopyFiles(bool throwIfError)

{

Scripting.FileSystemObject fso = new Scripting.FileSystemObjectClass();

try

{

fso.CopyFile("sourcefile1", "destfile1", true);

}

catch

{

if (throwIfError)

{

throw;

}

}

try

{

fso.CopyFile("sourcefile2", "destfile2", true);

}

catch

{

if (throwIfError)

{

throw;

}

}

try

{

fso.CopyFile("sourcefile3", "destfile3", true);

}

catch

{

if (throwIfError)

{

throw;

}

}

// seven more try-catch blocks

}

I think that the Russian is really kept in this translation.

First of all, when you do a translation, you should try to make it as native as possible. So why would you keep using a COM function when there is an equivalent in .NET? Why not use System.IO.File.Copy("sourcefile1", "destfile1", true); instead?

Second, I agree that On Error Resume Next is not a natural statement in C#. I really think that using it could provide results that are less predictable.

Why? Because after executing it, are you sure that all the CopyFile calls completed successfully? I would prefer wrapping the whole code inside a try-catch instead of trying to provide an implementation that is not natural in C#. Will aspect-oriented programming provide a clean solution for these cases? Maybe.
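As one possible reading of the two points above, here is a minimal sketch of a more native C# version. It is only an illustration, not the output of any migration tool: CopyDemo, the pair-array parameter and the returned failure list are choices made for this example. It uses System.IO.File.Copy instead of the COM FileSystemObject, keeps the throwIfError behavior, and collects the errors that On Error Resume Next would have silently discarded, so the caller can tell whether every copy succeeded.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class CopyDemo
{
    // Copies each { source, destination } pair with the native .NET call.
    // When throwIfError is false, failures are collected and returned
    // instead of being silently dropped.
    public static List<Exception> CopyFiles(string[][] pairs, bool throwIfError)
    {
        List<Exception> failures = new List<Exception>();
        foreach (string[] pair in pairs)
        {
            try
            {
                File.Copy(pair[0], pair[1], true); // true: overwrite the destination
            }
            catch (Exception ex)
            {
                if (throwIfError)
                {
                    throw; // same behavior as the VB6 code without On Error Resume Next
                }
                failures.Add(ex); // remember what went wrong and continue
            }
        }
        return failures;
    }

    public static void Main()
    {
        string[][] pairs =
        {
            new[] { "sourcefile1", "destfile1" },
            new[] { "sourcefile2", "destfile2" },
            new[] { "sourcefile3", "destfile3" }
        };
        List<Exception> failures = CopyFiles(pairs, false);
        Console.WriteLine(failures.Count + " copy operation(s) failed");
    }
}
```

A single loop with one try-catch replaces the ten repeated blocks, which is closer to what a C# developer would write by hand.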

RPG and COBOL to object-oriented programming, PowerBuilder to C#, hierarchical databases to relational databases: these are just the kind of challenges we have faced in our research projects.

Not everything is easy, and we might not be able to automate all the tasks (commonly due to the cost of implementing the automation, not because of feasibility).

But in the end, could you understand the whole novel, even if you didn't understand the joke in one of the paragraphs on the page?

My years of reading make me believe that you can.