WheWhen you are doing an azure migration, one of the first thing you must do is

collect all the information you can about your database.

Also at some point in your migration process you might consider between migration to

SQL Azure or Azure Storage or Azure Tables.

Do do all the appropriate decisions you need to collect at least basic data like:

- Database Size

- Table Size

- Row Size

- User Defined Types or any other code that depends on the CLR

- Extended Properties

Database Size

You can use a script like this to collect some general information:

create table #spaceused(

databasename varchar(255),

size varchar(255),

owner varchar(255),

dbid int,

created varchar(255),

status varchar(255),

level int)

insert #spaceused (databasename , size,owner,dbid,created,status, level) exec sp_helpdb

select * from #spaceused for xml raw

drop table #spaceused

When you run this script you will get an XML like:

<row databasename="master"

size=" 33.69 MB"

owner="sa"

dbid="1"

created="Apr 8 2003"

status="Status=ONLINE, ..."

level="90"/>

<row databasename="msdb"

size=" 50.50 MB"

owner="sa"

dbid="4"

created="Oct 14 2005"

status="Status=ONLINE, ..."

level="90"/>

<row databasename="mycooldb"

size=" 180.94 MB"

owner="sa"

dbid="89"

created="Apr 22 2010"

status="Status=ONLINE, ..."

level="90"/>

<row databasename="cooldb"

size=" 10.49 MB"

owner="sa"

dbid="53"

created="Jul 22 2010"

status="Status=ONLINE, ..."

level="90"/>

<row databasename="tempdb"

size=" 398.44 MB"

owner="sa" dbid="2"

created="Feb 16 2011"

status="Status=ONLINE, ..."

level="90"/>

And yes I know there are several other scripts that can give you more detailed information about your database

but this one answers simple questions like

Does my database fits in SQL Azure?

Which is an appropriate SQL Azure DB Size?

Also remember that SQL Azure is based on SQL Server 2008 (level 100).

80 = SQL Server 2000

90 = SQL Server 2005

100 = SQL Server 2008

If you are migrating from an older database (level 80 or 90) it might be necessary to upgrade first.

This post might be helpful: http://blog.scalabilityexperts.com/2008/01/28/upgrade-sql-server-2000-to-2005-or-2008/

Table Size

Table size is also important.There great script for that:

http://vyaskn.tripod.com/sp_show_biggest_tables.htm

If you plan to migrate to Azure Storage there are certain constraints. For example consider looking at the number of columns:

You can use these scripts: http://www.novicksoftware.com/udfofweek/vol2/t-sql-udf-vol-2-num-27-udf_tbl_colcounttab.htm (I just had to change the alter for create)

Row Size

I found this on a forum (thanks to Lee Dice and Michael Lee)

DECLARE @sql VARCHAR (8000)

, @tablename VARCHAR (255)

, @delim VARCHAR (3)

, @q CHAR (1)

SELECT @tablename = '{table name}'

, @q = CHAR (39)

SELECT @delim = ''

, @sql = 'SELECT '

SELECT @sql = @sql

+ @delim

+ 'ISNULL(DATALENGTH ([' + name + ']),0)'

, @delim = ' + '

FROM syscolumns

WHERE id = OBJECT_ID (@tablename)

ORDER BY colid

SELECT @sql = @sql + ' rowlength'

+ ' FROM [' + @tablename + ']'

, @sql = 'SELECT MAX (rowlength)'

+ ' FROM (' + @sql + ') rowlengths'

PRINT @sql

EXEC (@sql)

Remember to change the {table name} for the name of the table you need

User Defined Types or any other code that depends on the CLR

Just look at your db scripts at determine if there are any CREATE TYPE statements with the assembly keyword.

Also determine if CLR is enabled with a query like:

select * from sys.configurations where name = 'clr enabled'

If this query has a column value = 1 then it is enabled.

Extended Properties

Look for calls to sp_addextendedproperty dropextendedproperty OBJECTPROPERTY and sys.extended_properties in your scripts.

I had a Windows Server 2003 and I was trying to connect to Oracle with the System.Data.OracleClient provider.

I was able to connect from a console application but not from ASP.NET.

From ASP.NET I only got ORA-12154 errors.

I found that on Windows 2003 Server, ASP.NET applications run in the

security context of the “Network Service” user.

So I tried these two things:

I first started following these steps:

1. Log on to Windows as a user with Administrator privileges.

2. Launch Windows Explorer from the Start Menu and and navigate to the

ORACLE_HOME folder. It is usually under the oracle instalation folder.

In my case that is C:\oracle\product\10.2.0\client_1

3. Right-click on the ORACLE_HOME folder and choose the "Properties" option

from the drop down list. A "Properties" window should appear.

4. Click on the "Security" tab of the "Properties" window.

5. Click on "Authenticated Users" item in the "Name" list (on Windows XP

the "Name" list is called "Group or user names").

6. Uncheck the "Read and Execute" box in the "Permissions" list under the

"Allow" column (on Windows XP the "Permissions" list is called

"Permissions for Authenticated Users").

7. Re-check the "Read and Execute" box under the "Allow" column (this is

the box you just unchecked).

8. Click the "Advanced" button and in the "Permission Entries" list make

sure you see the "Authenticated Users" listed there with:

Permission = Read & Execute

Apply To = This folder, subfolders and files

If this is NOT the case, edit that line and make sure the "Apply onto"

drop-down box is set to "This folder, subfolders and files". This

should already be set properly but it is important that you verify this.

9. Click the "Ok" button until you close out all of the security properties

windows. The cursor may present the hour glass for a few seconds as it

applies the permissions you just changed to all subfolders and files.

10. Reboot your computer to assure that these changes have taken effect.

(I thought that rebooting was not that important but it seems that you have to reboot to make changes effective)

It sometimes happens that it is not enough, because it seems that some oracle installations need the

the ASP.NET process to run with an account with sufficient privileges.

The second thing you can do in that case is.

1. First open the machine.config file. That will be usually in %windir%\Microsoft.NET\Framework\v2.0.50727\CONFIG\machine.config

2. Look for something like:

<system.web>

<processModel autoConfig="true" />

3. Add the userName=”System” attribute. For example

<processModel autoConfig="true" userName="System" />

4. Restart the IIS.

Microsoft announced that they won’t support the System.Data.OracleClient anymore :(

The message says that it will still be available in .NET 4.0 but “deprecated” and that it wont impact existing applicatoions.

So what are my options?

Well you have to go to a third party. Not now but eventually.

Oracle Data Provider for .NET (ODP.NET) (Oracle states that ODP.NET is Free)

DataDirect ADO.NET Provider for Oracle

dotConnect for Oracle

Migration to ODP.NET

Installation

Microsoft OracleClient to ODP.NET Application Migration: Code Migration

Oracle provide general instructions. In summary you need to:

- Add the references to Oracle.DataAccess

- Change namespaces: System.Data.OracleClient by Oracle.DataAccess.Client; and maybe add “using Oracle.DataAccess.Types”

- Update the connection String (most attributes are the same). Change Integrated Security by "User Id=/", change “Server” attribute to “Data Source” and remove Unicode

- The following is anoying but you have to add after all OracleCommands something like OracleCommand1.BindByName = true;

Deployment

- Oracle Universal Installer (either in normal installation or Silent Install)

- XCopy (I like this :) ) just remember to download the ODAC xcopy version

If you have created any schedule jobs or you just need to see what jobs are available in a server you use the dba_jobs table.

The following links provides more details about this view: http://www.praetoriate.com/data_dictionary/dd_dba_jobs.htm

This is way a discused with a friend for migrating a VB6 RDS CreateRecordset

Private Function Foo(rs As ADOR.Recordset) As Boolean

On Error GoTo Failed

Dim ColumnInfo(0 To 1), c0(0 To 3), c1(0 To 3)

Dim auxVar As RDS.DataControl

Set auxVar = New RDS.DataControl

ColInfo(0) = Array("Value", CInt(201), CInt(1024), True) ColInfo(1) = Array("Name", CInt(129), CInt(255), True)

Set rs = auxVar.CreateRecordSet(ColumnInfo)

Foo = True

Exit Function

Failed:

Foo = False

Exit Function

End Function

According to MSDN the CreateRecordset function takes a Varriant array with definitions for the columns. This definitions are made up of four parts

| Attribute |

Description |

| Name |

Name of the column header. |

| Type |

Integer of the data type. |

| Size |

Integer of the width in characters, regardless of data type. |

| Nullability |

Boolean value. |

| Scale (Optional) |

This optional attribute defines the scale for numeric fields. If this value is not specified, numeric values will be truncated to a scale of three. Precision is not affected, but the number of digits following the decimal point will be truncated to three. |

So if we are going to migrate to System.Data.DataColumn we will used a type translation like the following (for now I’m just putting some simple cases)

| Length |

Constant |

Number |

DataColumn Type |

| Fixed |

adTinyInt |

16 |

typeof(byte) |

| Fixed |

adSmallInt |

2 |

typeof(short) |

| Fixed |

adInteger |

3 |

typeof(int) |

| Fixed |

adBigInt |

20 |

|

| Fixed |

adUnsignedTinyInt |

17 |

|

| Fixed |

adUnsignedSmallInt |

18 |

|

| Fixed |

adUnsignedInt |

19 |

|

| Fixed |

adUnsignedBigInt |

21 |

|

| Fixed |

adSingle |

4 |

|

| Fixed |

adDouble |

5 |

|

| Fixed |

adCurrency |

6 |

|

| Fixed |

adDecimal |

14 |

|

| Fixed |

adNumeric |

131 |

|

| Fixed |

adBoolean |

11 |

|

| Fixed |

adError |

10 |

|

| Fixed |

adGuid |

72 |

typeof(System.Guid) |

| Fixed |

adDate |

7 |

Typeof(System.DateTime) |

| Fixed |

adDBDate |

133 |

|

| Fixed |

adDBTime |

134 |

|

| Fixed |

adDBTimestamp |

135 |

|

| Variable |

adBSTR |

8 |

|

| Variable |

adChar |

129 |

typeof(string) |

| Variable |

adVarChar |

200 |

typeof(string) |

| Variable |

adLongVarChar |

201 |

typeof(string) |

| Variable |

adWChar |

130 |

|

| Variable |

adVarWChar |

202 |

|

| Variable |

adLongVarWChar |

203 |

|

| Variable |

adBinary |

128 |

|

| Variable |

adVarBinary |

204 |

|

| Variable |

adLongVarBinary |

205 |

|

So the final code can be something like this: private bool Foo(DataSet rs)

{

try

{

DataColumn dtCol1 = new DataColumn("Value",typeof(string));

dtCol1.AllowDBNull = true;

dtCol1.MaxLength = 1024;

DataColumn dtCol2 = new DataColumn("Name",typeof(string));dtCol2.AllowDBNull = true;

dtCol2.MaxLength = 255;

DataTable dt = rs.Tables.Add();

dt.Columns.Add(dtCol1);

dt.Columns.Add(dtCol2);

return true;

}

catch

{

return false;

}

}

NOTES:

My friend Esteban also told my that I can use C# 3 syntax and write something even cooler like:

DataColumn dtCol1 = new DataColumn()

{

ColumnName = "Value",

DataType = typeof (string),

AllowDBNull = true,

MaxLength = 1024

};

This code is so handy that I'm posting it just to remember. I preffer to serialize my datasets as attributes instead of elements. And its just a matter of using a setting. See:

Dim cnPubs As New SqlConnection("Data Source=<servername>;user id=<username>;" & _

"password=<password>;Initial Catalog=Pubs;")

Dim daAuthors As New SqlDataAdapter("Select * from Authors", cnPubs)

Dim ds As New DataSet()

cnPubs.Open()

daAuthors.Fill(ds, "Authors")

Dim dc As DataColumn

For Each dc In ds.Tables("Authors").Columns

dc.ColumnMapping = MappingType.Attribute

Next

ds.WriteXml("c:\Authors.xml")

Console.WriteLine("Completed writing XML file, using a DataSet")

Console.Read()

Recently a friend at work had a problem querying a SQL server that indicated

that the column name was wrong.

The only thing wrong was the the case. For example he had COLUMN1 instead of

Column1. I had never seen that problem in SQLServer.

I had seed that in Sybase but not in SQLServer. He solved that by changing the

database collating sequence to something like this:

alter database database1 collate

SQL_Latin1_General_CP1_CI_AI

the CI in the collating indicates Case Insensitive

For more information on SQL Server collations check:

http://msdn2.microsoft.com/en-us/library/aa258233(SQL.80).aspx

And you determine your current database collation use a code like this:

USE

yourdb>

GO

print

'My

database ['

+

db_name()

+

']

collation is: '

+

cast(

DATABASEPROPERTYEX

(

db_name(),

N'Collation'

)

as

varchar(128)

)

print

'My tempdb

database collation is: '

+

cast(

DATABASEPROPERTYEX

(

'tempdb',

N'Collation'

)

as

varchar(128)

)

Source IP for connected applications in DB2

Usually when there are performance problems you need to

track who is connected to the database. Especially in development environments

because production environments are more restricted with who has access to the

DB.

You can easily list the applications connected to your DB2

database using the control center application or from the command line.

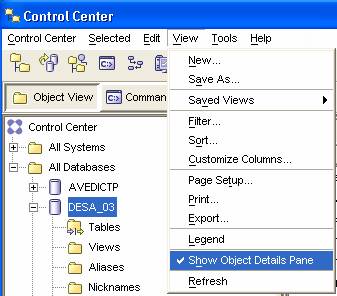

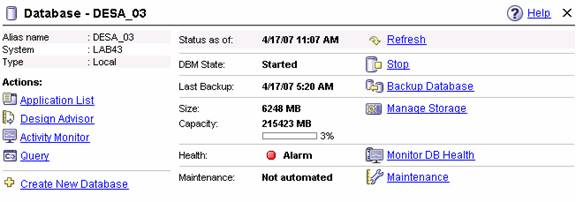

From the control center:

- First

check that the Objects Detail Pane is active

- In the

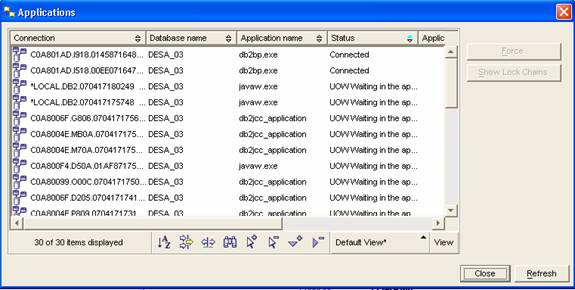

Objects Details Pane, click the application list link

- This

will open a window like this:

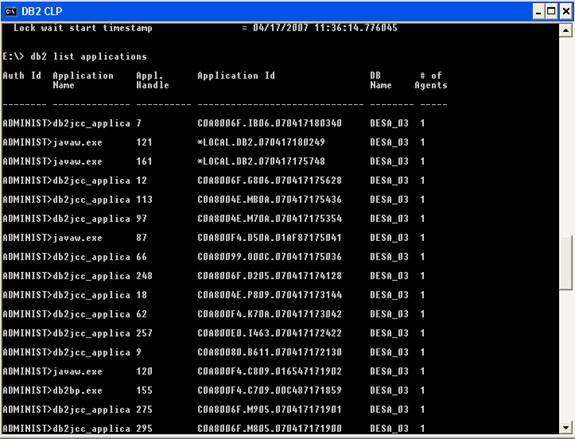

From the command window just do something like:

DB2 LIST APPLICATIONS

Now to get the real juicy and useful information execute

from the command line:

DB2 GET SNAPSHOT FOR APPLICATION AGENTID ###

And use the number that is under the Appl.

Handle column, this will show the ip address and

other info.

The idea was to create a harry potter like title jeje.

Today I had a new issue with DB2 (everyday you learn something new).

I got to work and we had some tables that you could not query or do anything. The system reported something like:

SQL0668N Operation not allowed for reason code "1" on table "MYSCHEMA.MYTABLE".

SQLSTATE=57016

So I started looking what is an 57016 code????

After some googling I found that the table was in an "unavailable state". OK!!

But how did it got there? Well that I still I'm not certain. And the most important. How do I get it out of that state?????

Well I found that the magic spell is somehting like

>db2 set integrity for myschema.mytable immediate checked

After that statement everything works like a charm.

DB2 Docs state that:

"Consider the statement:

SET INTEGRITY FOR T IMMEDIATE CHECKED

Situations in which the system will require a full refresh, or will check the whole table

for integrity (the INCREMENTAL option cannot be specified) are:

- When new constraints have been added to T itself

- When a LOAD REPLACE operation against T, it parents, or its underlying tables has taken place

- When the NOT LOGGED INITIALLY WITH EMPTY TABLE option has been activated after the last

integrity check on T, its parents, or its underlying tables

- The cascading effect of full processing, when any parent of T (or underlying table,

if T is a materialized query table or a staging table) has been checked for integrity

non-incrementally

- If the table was in check pending state before migration, full processing is required

the first time the table is checked for integrity after migration

- If the table space containing the table or its parent (or underlying table of a materialized query

table or a staging table) has been rolled forward to a point in time, and the table and its

parent (or underlying table if the table is a materialized query table or a staging table) reside

in different table spaces

- When T is a materialized query table, and a LOAD REPLACE or LOAD INSERT operation directly into T

has taken place after the last refresh"

But I really dont know what happened with my table.

Hope this help you out.