La idea de este blog va a ser contar esos detalles nimios que colorean todo proyecto de desarrollo. Y no es cualquier proyecto, sino que este va a puro primerizo: mi primer proyecto como ScrumMaster y el primero utilizando Scrum en Artinsoft. Qué puedo decir? Basicamente todo aquello que no puedo. En principio voy a contar poco y nada sobre el producto en si. Lo segundo es más polémico: nombres. Voy a usar iniciales para referirme al resto de los integrantes del grupo de trabajo. Pero esí, chismosos del mundo, a no ilusionarse. La idea no es sacar trapitos al sol, sino simplemente dejar asentado qué pasa en esta oficina...si puedo, día por día.

Product Managers are among the most important --and influencing stakeholders in a migration project. Actually, they are the “owners” of the application that is being migrated and because of this, they may be reluctant to approve changes to the application.

In many cases, it will not be possible to reach the state of Functional Equivalence in a target platform with 100% the same user interface features as the original application. For example, Tab Controls in Visual Basic 6.0 don’t respond to click events when they are disabled, so the user cannot see the contents of the tab. In Visual Basic .NET, tab controls still respond to click events when they are disabled, so they display their contents in a disabled state. This visual difference is intrinsic to the way the .NET control behaves, and therefore it should be regarded as an expected change after the migration.

Just like the one I mentioned, there may be several changes in the application as part of the migration process. It is very important to make Product Managers aware of these expected changes, and have their approval before the changes are made. Product Managers will have great degree of influence on your migration project, so do your best to keep them on your side!

Before I get started on this blog post, allow me to say that this is something that you should never do in a production environment. That being said, when working with the Administrative Web Interface of Virtual Server, it will prompt you for username/password when you start the browser session and when you want to use VMRC to control a VM:

See that "Remember my password" checkbox? Well, it has never worked for us, and according to David Wang, this is an issue with the Virtual Server's Administrative Web Interface being accessed not as an Intranet site but rather as an Internet site. I tried messing with the security settings of my trusted site to allow the use of the currently logged on user on the sites - this lead me nowhere as the web site would not even show up.

I then proceeded to mess with some settings with IIS and I have achieved my purpose: no more password input every time I need to VMRC! . It is worth mentioning again that this is something I am doing on an isolated machine that we use for testing purposes - never, ever do this on a production machine!!!

Here is what you need to do:

- Access the IIS Control Panel

- Expand the following nodes: Virtual Server --> VirtualServer

- Right click on the VirtualServer node and select Properties

- Click on the Directory Security Tab

- Click the Edit button under Authentication and access control

- Mark the check labeled "Enable anonymous access"

- Enter a username/password combination that is allowed to access Virtual Server

So far what you have done will allow you to access Virtual Servers main Administrative interface. If you want to skip the whole username/password issue when using VMRC, you must change some settings in IE:

- Access Internet Options from within IE

- Select the Trusted Sites Zone

- Click on Custom level

- Scroll all the way to the bottom and under User Authentication--> Logon, select "Automatic logon with current user name and password"

- Close the dialogs, restart IE

Once you do this, you will be able to access the Administrative Web Interface as the user you specified above. Nifty trick for testing but a big no-no for any other scenario.

The first step is to download the actual VM Additions. You can read more about the Linux VM Additions here. To download the additions, you need to log into Microsoft Connect, and the look in the available programs for the Virtual Machine Additions for Linux. Once you download and install the file VMAdditionsForLinux32Bit.MSI, you will have an additional ISO file under C:\Program Files\Microsoft Virtual Server\Virtual Machine Additions\, called VMAdditionsForLinux.iso. This ISO file will appear on the Known image files on the virtual machine’s CD/DVD Drive properties:

Once you mount the ISO, installing the additions is straightforward. You only need to change the directory to the CD-ROM drive from a terminal (in this case, /media/cdrom), and run the script vmadd-install.run. This script takes several parameters, depending on what part of the additions you wish to install. You can, for example, only install the SCSI driver, or the X11 driver, or any other component. I installed all of them using the command line ./vmadd-install.run all:

You can also install the additions using the RPM packages, if your distro supports it. Once the additions were installed, the VM worked like a charm. The mouse integration works perfectly, and the performance increase is noticeable.

As suggested in this post by Christian, I went ahead and downloaded the vmdk2vhd utility to convert virtual hard drives from VMWare’s VMDK format to Microsoft’s VHD format. To test it out, I also downloaded Red Hat Enterprise Linux trial virtual appliance from VMWare’s site, and ran the converter on it. These are my results.

First, running the converter is pretty straightforward. You just launch the utility, and it presents you with a simple UI, where you select the vmdk you want to convert, and the path to the destination VHD.

Once you select the vmdk and the vhd, press the convert button to start the conversion process:

The tool shows you a dialog box when it completes the conversion:

I created a new virtual machine in Virtual Server 2005 R2 SP1 Beta 2 using this newly converted VHD, and, not knowing what to expect, I started it. The redhat OS started the boot up process normally:

After making some adjustments to the configuration, including the X Server configuration, I finally got the X Windows server to come up. I am currently playing with the OS, and will proceed to install the VM Additions … I will document the process in another post soon.

As you can see, the process for converting a VMWare virtual hard disk to a Virtual Server VHd is very straightforward. This will work, ideally, in a migration scenario, but this also enables a scenario to try out the large amount of Virtual Appliances that you can download from VMWare’s website.

Virtual Server 2005 R2 SP1 Beta 2 includes VHD offline mounting, which can be very useful to read the contents of a virtual hard drive from the host, without having to load a virtual machine to do the job.

It might be useful from time to time for some people to have the same feature for Virtual Floppy Disks (.vfd) files. Just to give you an example, some tools depend on floppy disks to create backups that you may be interested on accessing from a physical machine.

This feature is not available with Virtual Server and it probably won't be (I don't think Microsoft would consider this a priority). But if you ever happen to need this, Ken Kato has created a Virtual Floppy Driver that you can use. It lets you view, edit, rename, delete or create files on a virtual floppy disk.

If you browse around the site you will also find a lot of VMWare tools that you might find useful.

If the tasks that your application carries out are independent of each other, a way to optimize things is to create threads for various tasks. There are many ways to thread applications including Pthreads, windows threading, and recently OpenMP. OpenMP excels in the sense that it can make your application multi-threaded by just writing a few pragmas here and there.

Once you multi-thread your application, many things can go astray. For instance, different threads can access variables at different intervals in a loop, which can only lead to disastrous results in your calculations. You can break your head and lose some of your sanity by manually debugging what is going wrong with your application or you can use Intel's thread checker to find out what is going on. For instance, the following sceenshot shows you the output of all the problems (referred to as data races) when various threads were accessing variables and changing them on each loop:

Furthermore, your application's threads might starve waiting for a particular resource to be freed, which can only make the whole multi-threading effort futile. Tools like Intel's thread profiler can help you find this info. For example, after data collection, based on the following screenshot, you can pretty much tell that due to locks in the code, the threads are pretty much stalling the application:

Now that you know what these two tools are and what they do, what I wanted to show you was how to get around the fact that none of these tools can be installed on a Itanium box. That is, now that the Itanium is dual-core, how do you go about optimizing multi-threaded apps using these tools? The solution to this problem will be included in the second part of this post, stay tuned!

The idea was to create a harry potter like title jeje.

Today I had a new issue with DB2 (everyday you learn something new).

I got to work and we had some tables that you could not query or do anything. The system reported something like:

SQL0668N Operation not allowed for reason code "1" on table "MYSCHEMA.MYTABLE".

SQLSTATE=57016

So I started looking what is an 57016 code????

After some googling I found that the table was in an "unavailable state". OK!!

But how did it got there? Well that I still I'm not certain. And the most important. How do I get it out of that state?????

Well I found that the magic spell is somehting like

>db2 set integrity for myschema.mytable immediate checked

After that statement everything works like a charm.

DB2 Docs state that:

"Consider the statement:

SET INTEGRITY FOR T IMMEDIATE CHECKED

Situations in which the system will require a full refresh, or will check the whole table

for integrity (the INCREMENTAL option cannot be specified) are:

- When new constraints have been added to T itself

- When a LOAD REPLACE operation against T, it parents, or its underlying tables has taken place

- When the NOT LOGGED INITIALLY WITH EMPTY TABLE option has been activated after the last

integrity check on T, its parents, or its underlying tables

- The cascading effect of full processing, when any parent of T (or underlying table,

if T is a materialized query table or a staging table) has been checked for integrity

non-incrementally

- If the table was in check pending state before migration, full processing is required

the first time the table is checked for integrity after migration

- If the table space containing the table or its parent (or underlying table of a materialized query

table or a staging table) has been rolled forward to a point in time, and the table and its

parent (or underlying table if the table is a materialized query table or a staging table) reside

in different table spaces

- When T is a materialized query table, and a LOAD REPLACE or LOAD INSERT operation directly into T

has taken place after the last refresh"

But I really dont know what happened with my table.

Hope this help you out.

If you are familiar with Virtual Server you know that you can use it to run scripts on the Host OS by attaching them to events, such as: turning on or off the Virtual Server service, or turning on or off a virtual machine.

But these scripts run on the host. What if you want to launch an application inside the guest OS?

Suppose you have a VBScript file called MyVBScript.vbs, and you want to run it inside the guest operating system of a virtual machine, or a bunch of them. You can create an ISO image that will contain an Autorun configuration that will execute this script. Once that's done, you can simply attach the ISO file to the virtual machine(s) where you want your script to run.

We will use the unauthorized ISO Recorder Power Toy to create the ISO file. ISO Recorder v2.0 works on Windows XP and Windows 2003. After you install this application you will be able to create ISO files out of folders in your filesystem.

- Create a new folder. Let's call it VMScript.

- Copy MyVBScript.vbs to the VMScript folder.

- Create a new file in the folder called Autorun.inf.

- Edit the Autorun.inf file and add the following lines:

[autorun]

open=wscript MyVBScript.vbs

- You can replace the text after "open=" if you want to execute a different command line, you can even launch an application with a UI.

- Save the Autorun.inf file.

- Right clik on the VMScript folder and select Create ISO image file.

- Follow the wizard steps and a new file called RemoteScript.iso will be created. This is the CD/DVD image that you can attach to the virtual machine where you want the script to run. When you do, unless the Autorun feature has been disabled, your script will launch in the guest OS.

This is the same technique that Microsoft uses to launch the VM Additions and the precompactor. Now you can exploit this and even create interesting combinations. For instance you can attach a script to an event in Virtual Server that will attach your ISO file to a virtual machine.

IMPORTANT NOTES:

- ISO Recorder is meant to be used for personal use, but it is used by a number of companies around the world. I have found no problems with it. Make sure you read the license agreement and documentation for this application.

- AutoRun is normally enabled by default on Windows operating systems. For more information on this read CD AutoRun basics.

- If you are not that familiar with Virtual Server and you don't know how to attach ISO files, manage removable media or attach scripts to Virtual Server, read the Virtual Server Administrator's Guide included in the installation package.

Juan Pastor recently published an article that analyzes the risk of having mission critical applications running on non supported software (does it sound like VB6?). Will you get in trouble with Sarbanes-Oxley? Read on and you'll find out. However, let me just ask you this: Why should you take the risk?

-------

The white paper considers the latest developments regarding SOX compliance and explains how organizations can ensure ongoing certification by migrating their legacy finance applications to a modern IT platform.

Link to Migrating-Away-From-Compliance-Quicksand

Active Directory is also LDAP. So to integrate with the Active Directory you can use code very similar to that one used with other LDAP servers.

To connect to the Active Directory you can do something like this:

import java.util.Hashtable;

import

javax.naming.Context;

import

javax.naming.NamingException;

import

javax.naming.directory.DirContext;

import

javax.naming.directory.InitialDirContext;

/**

* @author mrojas

*/

public class Test1

{

public static void main(String[] args)

{

Hashtable

environment = new Hashtable();

//Just

change your user here

String

myUser = "myUser";

//Just change your user password here

String

myPassword = "myUser";

//Just

change your domain name here

String

myDomain = "myDomain";

//Host

name or IP

String

myActiveDirectoryServer = "192.168.0.20";

environment.put(Context.INITIAL_CONTEXT_FACTORY,

"com.sun.jndi.ldap.LdapCtxFactory");

environment.put(Context.PROVIDER_URL,

"ldap://" + myActiveDirectoryServer

+ ":389");

environment.put(Context.SECURITY_AUTHENTICATION,

"simple");

environment.put(Context.SECURITY_PRINCIPAL,

"CN=" + myUser + ",CN=Users,DC=" + myDomain + ",DC=COM");

environment.put(Context.SECURITY_CREDENTIALS,

myPassword);

try

{

DirContext context = new InitialDirContext(environment);

System.out.println("Lluvia de exitos!!");

}

catch

(NamingException e)

{

e.printStackTrace();

}

}

}

These days I have been testing all of our 64-bit labs on a

Montecito (Itanium) box that was lent to use by HP. The newest feature of

this new chip is the fact that it has two cores, which should make

multi-threaded applications perform a lot faster. I have not developed in

a while for the Itanium, and one of the first things that struck me was the

fact that there is no "Intel Compiler Installer" available for download.

As of version 9.1.028, the compiler installer includes compilers for x86, x64,

and IA64 - sweet.

While following my usual install procedure (click next until the installer

finishes) I noticed that my IA64 compiler was completely broken - even when

compiling a Hello World. The error I got was the following:

icl: internal error: Assertion failed (shared/driver/drvutils.c, line 535)

After reading some forums which did not hint me at all what the problem was, I

decided to re-install but this time around reading the installer

screens. It happens that one of the requirements for this compiler

to work is that it needs the PSDK from Microsoft installed, something that I

had completely forgotten to do. Once I installed the PSDK, the IA64

compiler was a happy camper and everything worked OK. Hopefully, some

desperate soul will be able to find this info if they ever face that dreaded

message.

How do we appropriately represent Web pages for semantic analysis and particularly for refactoring purposes? In a previous post at this same place, we introduced some of the main principles of what we have called semantically driven rational refactoring of Web pages as a general framework of symbolic reasoning. At this post, we want to develop some preliminary but formally appealing refinement concerning page representation; we assume our previous post has been read and we put ourselves in its context: development of automated tools pointing to the task of refactoring as a goal.

We claim that many valuable derivations can be obtained by following a semantic approach to the handling of web pages those of which are both of conceptual and implementation nature and interest; we believe looking with more detail at such derivations may provide us with more evidence for sustaining our claim on semantics in the context of refactoring. In this post, we want to consider one very specific case of conceptual derivation with respect to the representation model. Specifically, we want to present some intuitive criteria for analyzing theoretically a symbolic interpretation of the popular k-means clustering scheme, which is considered useful for the domain of web pages in more general contexts.

Let us first and briefly remain why that is relevant to refactoring, to set up a general context. We recall that refactoring is often related to code understanding; hence building a useful semantic classification of data, in general, is a first step in the right direction. In our case web pages are our data sources, we want to be able to induce semantic patterns inside this kind of software elements, in a similar way as search engines do to classify pages (however, we had to expect that specific semantic criteria are probably being more involving in our refactoring case where, for instance, procedural and implementation features are especially relevant; we will expose such criteria in future posts).

In any case, it is natural to consider clustering of pages according to some given semantic criteria as an important piece in our refactoring context: Clustering is essence about (dis)similarity between data, is consequently very important in relation to common refactoring operations, especially those ones aiming at code redundancy. For instance, two similar code snippets in some set of web pages may become clustered together, allowing by this way an abstraction and refactoring of a "web control" or similar reusable element.

As several authors have done in other contexts, we may use a symbolic representation model of a page; in a general fashion pages can naturally be represented by means of so-called symbolic objects. For instance, an initial approximation of a page (in an XML notion) can be something of the form given below (where relevant page attributes (or features) like "title" and "content" can be directly extracted from the HTML):

<rawPage

title="Artinsoft home page"

content="ArtinSoft, the industry leader in legacy application migration and software upgrade technologies, provides the simple path to .NET and Java from a broad range of languages and platforms, such as Informix 4GL and Visual Basic."

.../>

Because this representation is not as such effortless for calculation purposes, attribute values need to be scaled from plain text into a more structured basis, for clustering and other similar purposes. One way is to translate each attribute value in numerical frequencies (e.g. histogram) according to the relative uses of normalized keywords taken from a given vocabulary (like "software", "legacy", "java", "software", etc) by using standard NLP techniques. After scaling our page could be probably something similar to the following:

<scaledPage

title ="artinsoft:0.95; software:0.00; java:0.00; "

content="artinsoft:0.93; software:0.90; java:0.75; " .../>

The attribute groups values are denoting keywords and its relative frequency in page (data is hypothetic in this simple example), as usual. Notice the attribute group is another form of symbolic object whose values are already numbers. In fact, we may build another completely flat symbolic object where attribute values are all scalar, in an obvious way:

<flatPage

title.artinsoft="0.95" title.software="0.00" title.java="0.00"

content.artinsoft="0.93" content.software="0.90" content.java="0.75" .../>

Now pages that are by this way related to some common keywords set can be easily compared using some appropriate numerical distance. Of course, more complex and sophisticated representations are feasible, but numerically scaling the symbolic objects remains as an important need at some final stage of the calculation. However, and this is a key point, many important aspects of the computational process can be modeled independently of such final numerical representation (at the side of the eventual explosion of low-level attributes or poorly dense long vectors of flat representations, which are not the point here).

Let us develop more on that considering the k-means clustering scheme but without committing it to any specific numerically valued scale, at least not too early. That is, we want to keep the essence of the algorithm so that it works for "pure" symbolic objects. In a simplified way, a (hard) k-means procedure works as follows:

1.Given are a finite set of objects D and an integer parameter k.

2.Choose k objects from D representing the initial group of cluster leaders or centroids.

3.Assign each object in D to the group that has the closest centroid.

4.When all objects in D have been assigned, recalculate the k centroids.

5.Repeat Steps 2 and 3 until the centroids no longer change (move).

Essential parameters to the algorithm are 1) the distance function (of step 3) to compute the closest object and some form of operation allowing "object combination" in order to produce the centroids (step 4) (in the numerical case "combination" calculates the mean vector from vectors in cluster).

Such a combination needs to be "consistent" with the distance to assure the eventual termination (not necessarily global optimality). We pursue to state a formal criterion of consistency in an abstract way. Fundamental for assuring termination is that every assignment of one object X to a new cluster occurs with lower distance with respect to the new cluster leader than the distance to the previous clusters leaders of X. In other words the assignment of X must be a really new one. Combine operation after assignment (step 4) must guarantee that in every old cluster where X was, their leaders still remain more distant after future recalculations. So we need abstract definitions of distance and object combination would satisfy these invariant, that we call consistency.

To propose a distance is usually easier (in analogy to the vector model), objects are supposed to belong to some class and the feature values belong to some space or domain provided with a metric. For instance, an attribute like "title" takes values over a "String" domain where several numerical more o less useful metrics can possibly be defined. For more structured domains, built up from metric spaces, we may homomorphically compose individual metrics of parts for building the metric of the composed domain. For a very simplified example, if we have two "page" objects X and Y of the following form:

X= <page>

<title>DXT</title>

<content>DXC</content>

</page>

Y= <page>

<title>DYT</title>

<content>DYC</content>

</page>

Where we have that DXT and DYT are eventually complex elements representing symbolic objects over a metric domain DT provided with distance dist_DT and similarly DXC, DYC comes from a metric space DC with metric dist_DC. We may induce a metric dist_page for the domain “page”, as linear combination (where weights for dimensions could also be considered):

dist_page(X, Y) = dist_DT(DXT, DYT) + dist_DC(DXC, DYC)

Using the simple illustration, we see that we may proceed in a similar fashion for the combine_page operation, assuming operations combine_DT and combine_DC. In such a case, we have:

combine_page(X,Y)= <page>

<title>combine_DT(DXT,DYT)</title>

<content>combine_DC(DXC,DYC)</content>

</page>

It is straightforward to formulate the general case, the principle is simple enough. It is worth to mention that "combine" is clearly a kind of abstract unification operation; in general, we claim has to be consistent with the "distance" function (at every domain); further requirements rules this operation, in particular, that the domain needs to be combination-complete or combination-dense.

Proving consistency strongly relies on abstract properties like the following:

dist_D(X, Y) >= dist_D(combine_D(X,Y), Y)

In other words, that "combine" actually comprises according to distance. Fortunately, by using homomorphic compositions of consistent domains, we recover consistency at the composed domain. At the scalar level, for instance with numbers, using average as "combination" operation easily assures consistency.

This week I finally manage to sit down and start using the 1.0 release of PowerShell. So far I’m impressed with its capabilities and ease of use. Here are a couple of tips to get you started with PowerShell scripting:

Get-Help

The commands in PowerShell follow the format verb-noun. It is very easy to “guess” a command once you’ve been using PS for a little while. To get the processes on the system, for example, you use the command get-process. To get the contents of a file you use get-contents -path . An so on.

So, guess what command is used to get help? Too easy, isn’t it? get-help is your greatest ally when working with PowerShell. If you are unsure of what a command does or how it works, you just need to invoke the get-help command, followed by the name of the command:

PS C:\> get-help get-process

NAME

Get-Process

SYNOPSIS

Gets the processes that are running on the local computer.

…

Also, get-help supports wildcards, so you can use it to search for commands (in case you’re not 100% positive on the exact command name). So, for example, you can do a:

PS C:\> get-help get-*

Name Category Synopsis

---- -------- --------

Get-Command Cmdlet Gets basic information about cmdlets...

Get-Help Cmdlet Displays information about Windows P...

Get-History Cmdlet Gets a list of the commands entered ...

…

Script Security

PowerShell scripts have the extension *.ps1. To execute scripts, you need to do two things:

- Enable script execution. This is achieved with the command Set-ExecutionPolicy. To learn more about this command, you can type:

PS C:\> get-help set-executionPolicy

- Enter the path to the script. If it is in the local directory, enter .\.ps1. If not, enter the fully qualified path.

Scripting in PowerShell is very easy – the syntax is very C#-like, and it has the power of both the .NET Framework AND COM objects at your disposal.

Use .NET Data Types

PowerShell is built on top of the .NET Framework. Because of that, you can use .NET Data Types freely in your scripts and in the command prompt. You can do things like:

PS C:\> $date = new-object -typeName System.DateTime

PS C:\> $date.get_Date()

Monday, January 01, 0001 12:00:00 AM

Further help

Some great places to get you started with Powershell are:

Windows PowerShell Team blog

Technet’s PowerShell Scripting Center

Just PowerShell it

PowerShell For Fun

Don’t forget to get the Windows PowerShell Help Tool, for free, from Sapien’s website.

I found a super interesting blog called

Busy ColdFusion Developers' Guide to HSSF Features

http://www.d-ross.org/index.cfm?objectid=9C65ECEC-508B-E116-6F8A9F878188D7CA

HSSF is part of an open source java project that provides FREE OPEN SOURCE libraries to create Microsoft Office Files like Microsoft Word or Microsoft Excel.

So I was wondering could it be possible with Coldfusion to use those libraries. Specially now that Coldfusion MX relies heavily in Java. So I found this wonderfull blog that explains it all.

It just went to the HSSF download site:

http://www.apache.org/dyn/closer.cgi/jakarta/poi/

The project that holds these libraries is called POI.

When you enter in those links there is a release directory and a bin directory. I downloaded the zip file and you will find three files like: poi-Version-Date.jar; poi-contrib-Version-Date.jar and poi-scratchpad-Version-Date.jar

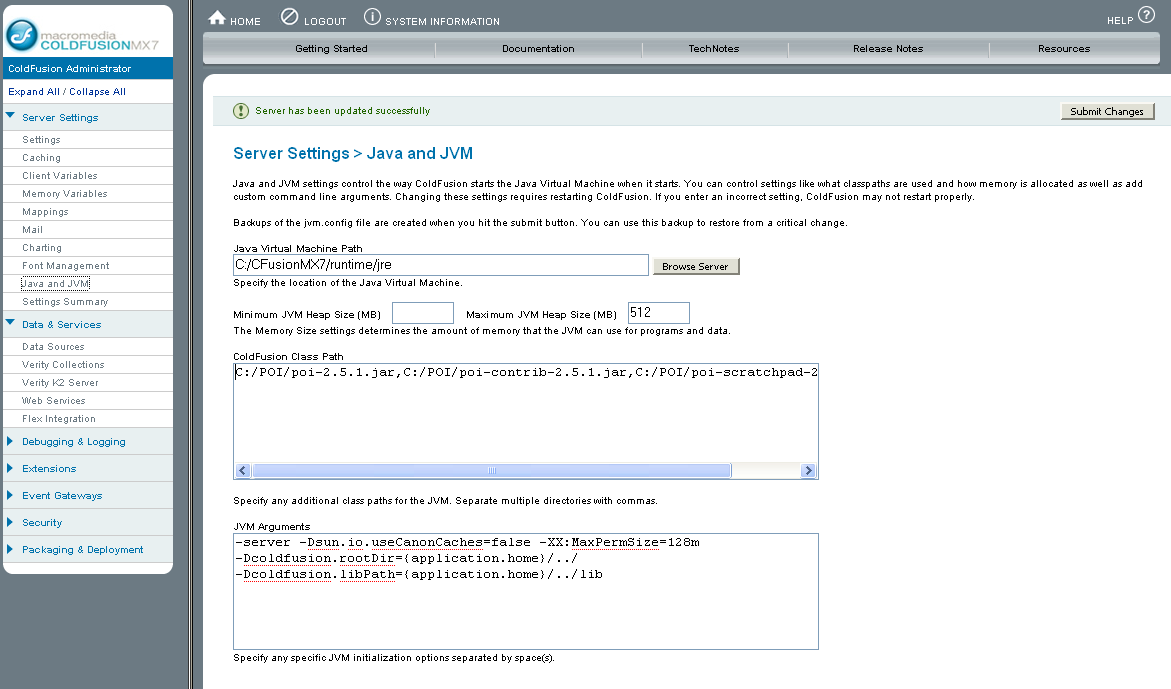

I downloaded the 2.5.1-final version. I renamed my jars to poi-2.5.1.jar, poi-2.5.1-contrib.jar and poi-2.5.1-scratchpad.jar because my memory cannot hold those complex names for a long time. I installed the library in C:\POI and put them in the Coldfusion Administrator classpath

And then I created a test file like the following:

Note: I used a recommendation from Cristial Cantrell http://weblogs.macromedia.com/cantrell/archives/2003/06/using_coldfusio.cfm

<cfscript>

context = getPageContext();

context.setFlushOutput(false);

response = context.getResponse().getResponse();

response.setContentType("application/vnd.ms-excel");

response.setHeader("Content-Disposition","attachment; filename=unknown.xls");

out = response.getOutputStream();

</cfscript>

<cfset wb = createObject("java","org.apache.poi.hssf.usermodel.HSSFWorkbook").init()/>

<cfset format = wb.createDataFormat()/>

<cfset sheet = wb.createSheet("new sheet")/>

<cfset cellStyle = wb.createCellStyle()/>

<!--- Take formats from: http://jakarta.apache.org/poi/apidocs/org/apache/poi/hssf/usermodel/HSSFDataFormat.html --->

<cfset cellStyle.setDataFormat(createObject("java","org.apache.poi.hssf.usermodel.HSSFDataFormat").getBuiltinFormat("0.00"))/>

<cfloop index = "LoopCount" from = "0" to = "100">

<!--- Create a row and put some cells in it. Rows are 0 based. --->

<cfset row = sheet.createRow(javacast("int",LoopCount))/>

<!--- Create a cell and put a value in it --->

<cfset cell = row.createCell(0)/>

<cfset cell.setCellType( 0)/>

<cfset cell.setCellValue(javacast("int",1))/>

<!--- Or do it on one line. --->

<cfset cell2 = row.createCell(1)/>

<cfset cell2.setCellStyle(cellStyle)/>

<cfset cell2.setCellValue(javacast("double","1.223452345342"))/>

<cfset row.createCell(2).setCellValue("This is a string")/>

<cfset row.createCell(3).setCellValue(true)/>

</cfloop>

<cfset wb.write(out)/>

<cfset wb.write(fileOut)/>

<cfset fileOut.close()/>

<cfset out.flush()/>

We have mentioned several times that if you can use SCSI for your VMs, then you should use it. In order to practice what we preach, I downloaded a VHD from the VHD Test Drive program (Windows Server Pro 2003) and hooked it up to a SCSI adapter and booted the machine. It was unpleasantly greeted by the dreaded Blue Screen of Death when booting. As far as the reason why it happens, I really cannot tell. Perhaps it has to do something with the VHD being syspreped and whatnot, but the purpose if this entry is not to tell you why it happens but rather how to get around it.

What I did was that I connected the VM to a virtual IDE controller and booted. I let the sysprep process finish and once I had a Windows Server 2003 logon screen, I turned off the VM. In the configuration screen of Virtual Server, I added a SCSI adapter and then added the VHD to an empty device:

Once this was set, the VM booted without any issues.

Virtual PC 2007 has moved to the Release Candidate stage. You can download it from here.

You can also check out the new features of this release on the Virtual PC Guy's WebLog .

If you had a holiday rush as some of us did maybe you missed some news about Visual Studio Service pack 1 being release.

Scott Guthrie (asp.net, iss, product manager at Microsoft) release today on his blog some really valuable information about this. He points to really good links like

But also more useful information, check it out the whole post:

A few VS 2005 SP1 Links and Information Nuggets

There are many ways to post blogs. You can use the web interface that most blog applications (blogger, community server, wordpress, etc.) offer. This can be very cumbersome and if you hit back by mistake on your browser, then all that you have typed can be gone in a matter of seconds.

I have recently stumbled on some (free) tools that will allow you to blog like a pro. The first one is called Live Writer Beta and is made by Microsoft - I found out about this from Volker's blog today. So far it has been very stable and is very easy to use. It is basically the same thing as using Word. This will get you covered as far as blogging goes, but if you want to add images and such (something our Community Server blog currently does not offer) you will need additional software.

To insert images, get the Flickr4Writer plugin, which will allow you insert images on your blog that are linked from Flickr account:

I am currently uploading my images to flickr using this (OS X) widget, I am pretty sure there is something similar for Vista if you look around (drop me a line if you find one).

Happy Blogging!

Over at IBM developerworks there is an overview of virtualization technologies, starting with the history of virtualization, virtualization methods, and some more information on the current status of virtualization in the IT industry. The article focuses on Linux Virtualization solutions, but the information it contains and the background it gives is worth a read.

Link: Virtual Linux.